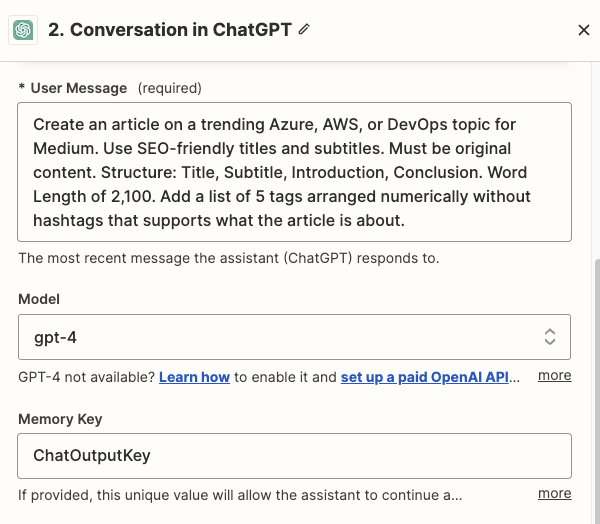

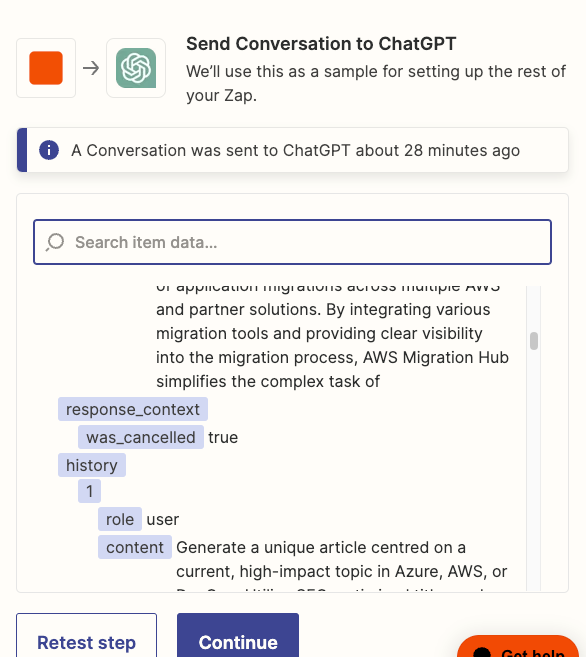

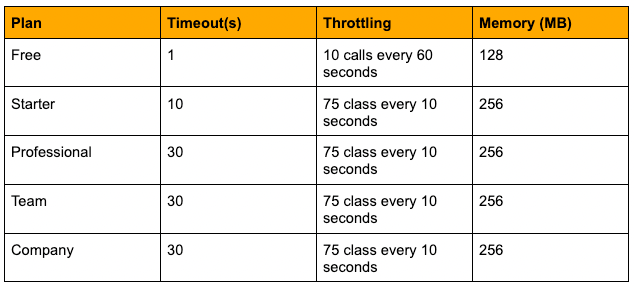

So I am new to using Zapier but seem to understand its limits so far. I am having ChatGPT generate an article, but it barely gets to a second paragraph before hitting a brick wall. This happens within only 30 seconds or so.

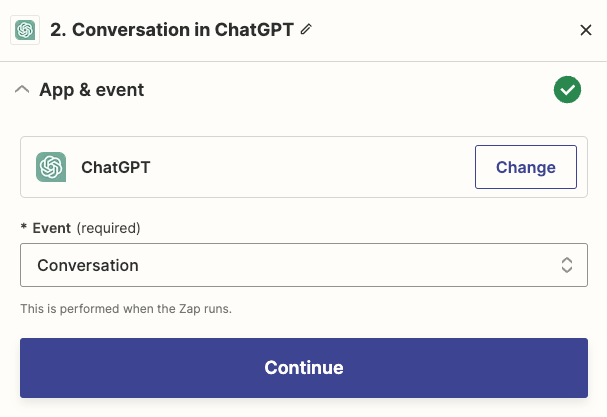

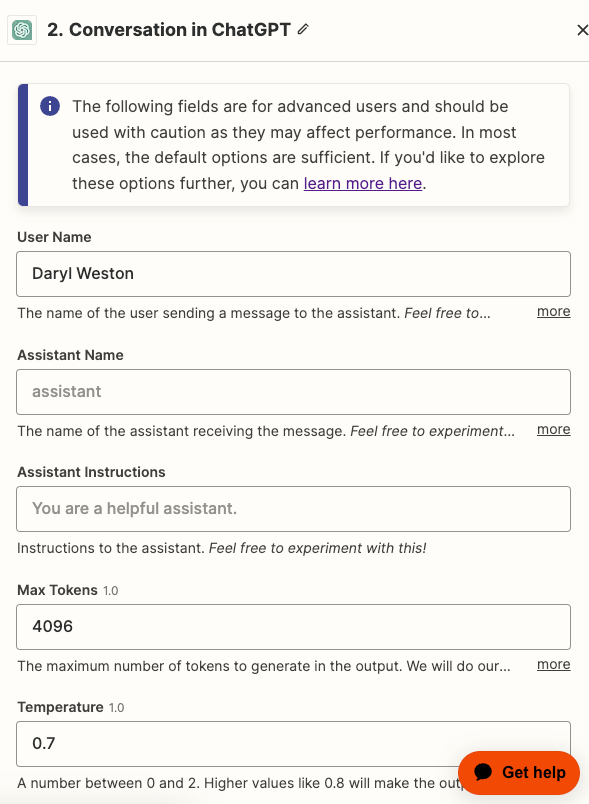

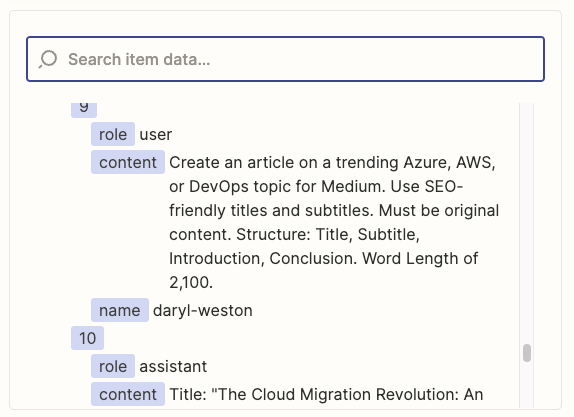

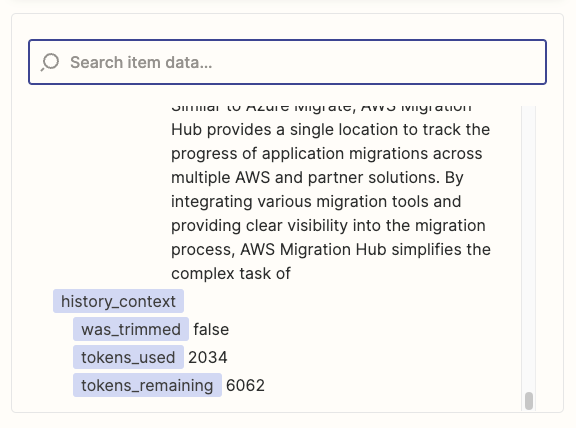

I keep getting was_cancelled false, not true, which I have read many times is what would indicate a timeout. It barely runs more than 30 seconds most times, and whether it says true or false, either way it cuts off the response and then retries the request, sometimes 3 times. It seemed to work at first and is now giving multiple errors like this one. This happens whether I use GPT-3.5 Turbo or GPT-4 with a massive amount of tokens; currently with GPT-3.5 Turbo I use 3800 or more. It cuts off, does this weird retry, and gives me 3 outputs instead of one long output.

Could you help me understand why this is happening? Is this a Zapier limitation or an OpenAI limitation?