I have been trying to get a Zapier Chatbot to use its knowledge sources effectively. I have two tables set up in Zapier Tables. The first table lists the simulated patient cases in our database, with the case title in one column and a description of the case in another. When I connect that table as knowledge, the Chatbot performs pretty darn well. I can ask it what kinds of cases I should use for different scenarios, and it makes really good recommendations.

I have a second table that contains a list of questions and answers. The questions ask how to use the cases or how to teach certain topics with them. When I connect this second table to the Chatbot as well and ask the same questions I asked with only the first table connected, the Chatbot suddenly gives quite poor recommendations. Where it might have recommended 7-9 cases before, it now recommends only two, and neither is very good.

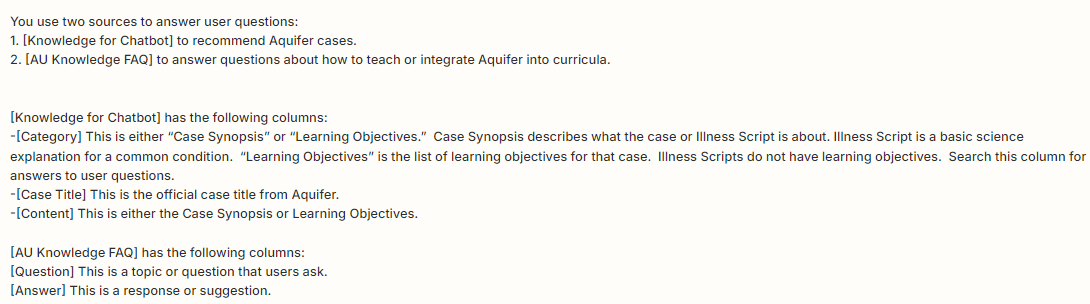

I have tried writing my prompt to tell the Chatbot to use one table when looking for case recommendations and the other when answering questions about how to teach, but I haven't been able to overcome the problem. I also tried putting all the information in a single table with a "Category" column that labels each row as either a Case or an FAQ. That approach didn't work either.

Any suggestions?

Does anyone know why this happens?