Hi all,

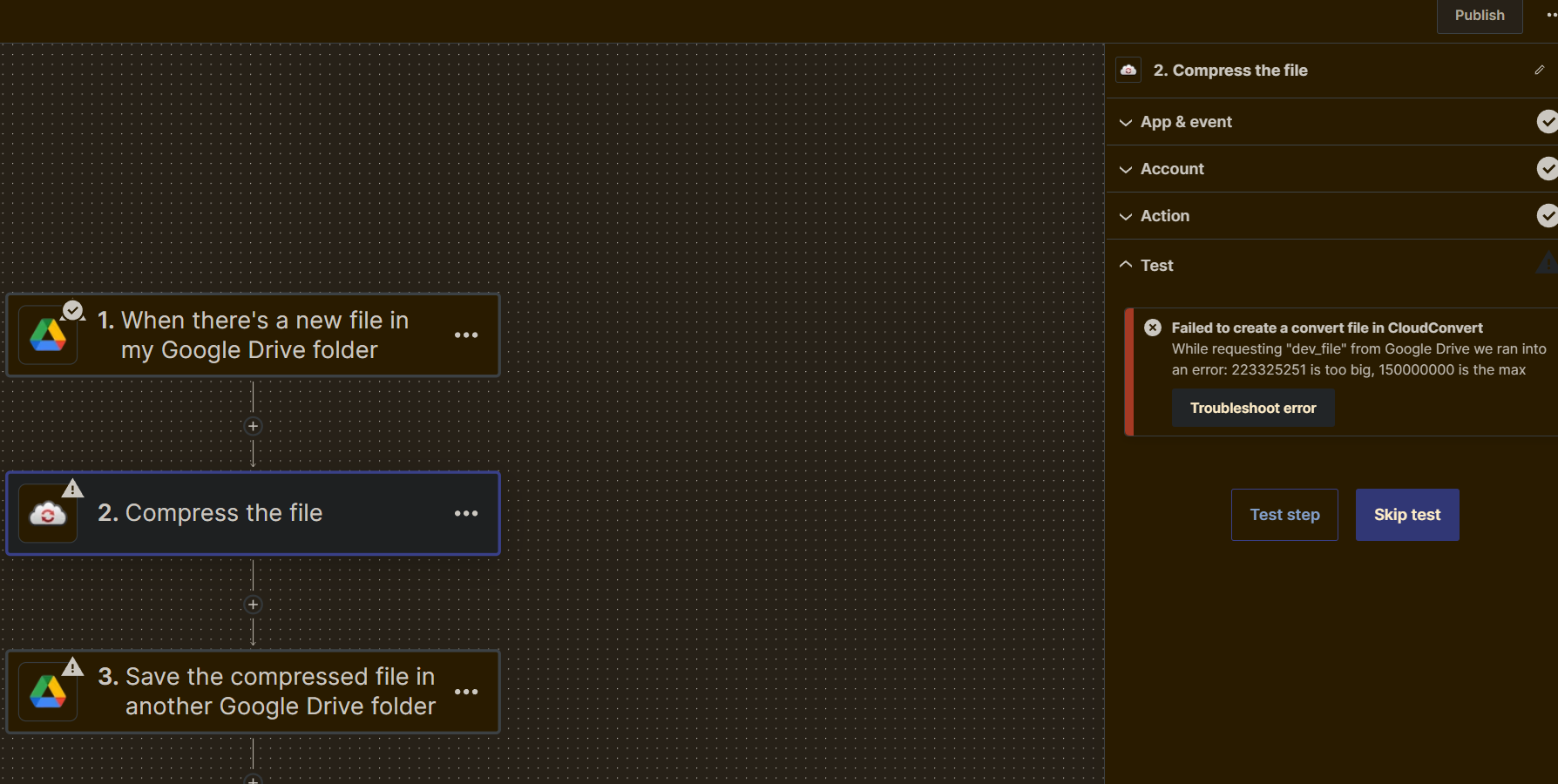

Trying to get a simple Zap going that converts files in my Google Drive folder if they're over 150MB and saves the converted versions to a different folder (so another Zap can then pick them up and upload them to my YouTube channel).

The trigger (a new file added to my Google Drive folder) fires fine, but the step that calls the CloudConvert API fails with:

Failed to create a convert file in CloudConvert

While requesting "dev_file" from Google Drive we ran into an error: 223325251 is too big, 150000000 is the max
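For reference, both numbers in that error are byte counts, so the limit looks like a decimal 150 MB (150,000,000 bytes) rather than 150 MiB. A quick Python check (the helper name is just for illustration) puts the file at about 223 MB, roughly 1.49x the limit:

```python
# Byte counts copied from the error message above.
FILE_BYTES = 223_325_251
LIMIT_BYTES = 150_000_000  # decimal MB; 150 MiB would be 157,286,400 bytes


def over_limit(size_bytes: int, limit_bytes: int = LIMIT_BYTES) -> bool:
    """Hypothetical helper: True if a file is larger than the transfer limit."""
    return size_bytes > limit_bytes


print(f"file:  {FILE_BYTES / 1e6:.1f} MB ({FILE_BYTES / 2**20:.1f} MiB)")
print(f"limit: {LIMIT_BYTES / 1e6:.1f} MB ({LIMIT_BYTES / 2**20:.1f} MiB)")
print(f"ratio: {FILE_BYTES / LIMIT_BYTES:.2f}x, over limit: {over_limit(FILE_BYTES)}")
```

This matters when splitting or trimming by hand: a tool that reports sizes in binary units might show a file as "145 MB" when it is actually about 152 million bytes, which would still be rejected.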

Is this kind of a catch-22, since the 150MB limit on the Google Drive file still applies to the very step that's supposed to reduce its size? If I convert the file manually on CloudConvert's site, it works fine: with a quality reduction, my test file was saved back to my Google Drive at around 40MB.
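The manual conversion shrinks the file because a quality reduction lowers the bitrate, and video file size is roughly bitrate × duration. Here is a rough sketch of the highest average bitrate that keeps a clip under a byte budget. It assumes you know the clip's duration, and the 5% margin for container overhead is a guess. The function names are hypothetical, not CloudConvert settings:

```python
def average_bitrate_kbps(size_bytes: int, duration_s: float) -> float:
    """Average bitrate of an existing file, in kbit/s."""
    return size_bytes * 8 / duration_s / 1000


def max_total_bitrate_kbps(duration_s: float, budget_bytes: int = 150_000_000,
                           overhead: float = 0.05) -> float:
    """Hypothetical helper: highest average bitrate (video + audio, kbit/s)
    expected to keep a clip of duration_s under budget_bytes.
    `overhead` is an assumed safety margin for container data."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    usable_bits = budget_bytes * 8 * (1 - overhead)
    return usable_bits / duration_s / 1000


if __name__ == "__main__":
    # Assumption: a 60-second clip; the real duration of the failing file is unknown.
    print(f"target: <= {max_total_bitrate_kbps(60):.0f} kbit/s")
    print(f"current (if 60 s long): {average_bitrate_kbps(223_325_251, 60):.0f} kbit/s")
```

Under those assumptions, a 60-second clip would need to average about 19,000 kbit/s or less. If the 223 MB file were 60 seconds long, it would be averaging nearly 30,000 kbit/s.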

Over a few nights, I also tried Google Apps Script with AI's help. At one point I got it running, but the resulting file was the same size, so the conversion didn't seem to do anything even though the file was passed through. (I don't have a screenshot of this because I went through so many iterations of the script while trying to get the AI to include all the code and make sure it actually worked.)

Is the best approach to manually split my files into pieces under 150MB before I add them to my Google Drive folder? I could pay for Zapier, but my Zaps are so basic for now that I don't think it's worth it. This usually only affects my source replay files anyway (about 1 minute long), so I could just trim them locally to the last 30 seconds or so, which gets them under the limit.
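The local trim can be scripted. This is a minimal sketch assuming ffmpeg is installed and on PATH; the file names and helper functions are hypothetical. It uses ffmpeg's `-sseof` input option, which seeks relative to the end of the file, and `-c copy`, which skips re-encoding. It also gives a rough constant-bitrate estimate of how many seconds fit under the limit:

```python
import shlex
import subprocess
from pathlib import Path


def seconds_that_fit(size_bytes: int, duration_s: float,
                     budget_bytes: int = 150_000_000) -> float:
    """Rough estimate assuming a constant bitrate: how many seconds of this
    clip fit under budget_bytes."""
    bytes_per_second = size_bytes / duration_s
    return budget_bytes / bytes_per_second


def trim_tail_cmd(src: Path, dst: Path, keep_s: float) -> list[str]:
    """Build an ffmpeg command that keeps roughly the last keep_s seconds.
    -sseof must come before -i because it is an input option. With -c copy,
    the cut may start at an earlier keyframe, so the result can run slightly long."""
    return ["ffmpeg", "-sseof", f"-{keep_s:g}", "-i", str(src),
            "-c", "copy", str(dst)]


def run_trim(src: Path, dst: Path, keep_s: float) -> None:
    """Run the trim; raises CalledProcessError if ffmpeg fails."""
    subprocess.run(trim_tail_cmd(src, dst, keep_s), check=True)


if __name__ == "__main__":
    # Assumption: the 223 MB file is about 60 s long, like the replays described above.
    print(f"~{seconds_that_fit(223_325_251, 60):.1f} s would fit under the limit")
    print(shlex.join(trim_tail_cmd(Path("replay.mp4"), Path("replay_last30.mp4"), 30)))
```

If that assumption holds, about 40 seconds would fit, so keeping the last 30 seconds leaves some headroom. If you would rather split than trim, ffmpeg's segment muxer (`-f segment -segment_time N`) can cut a file into fixed-length pieces instead.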