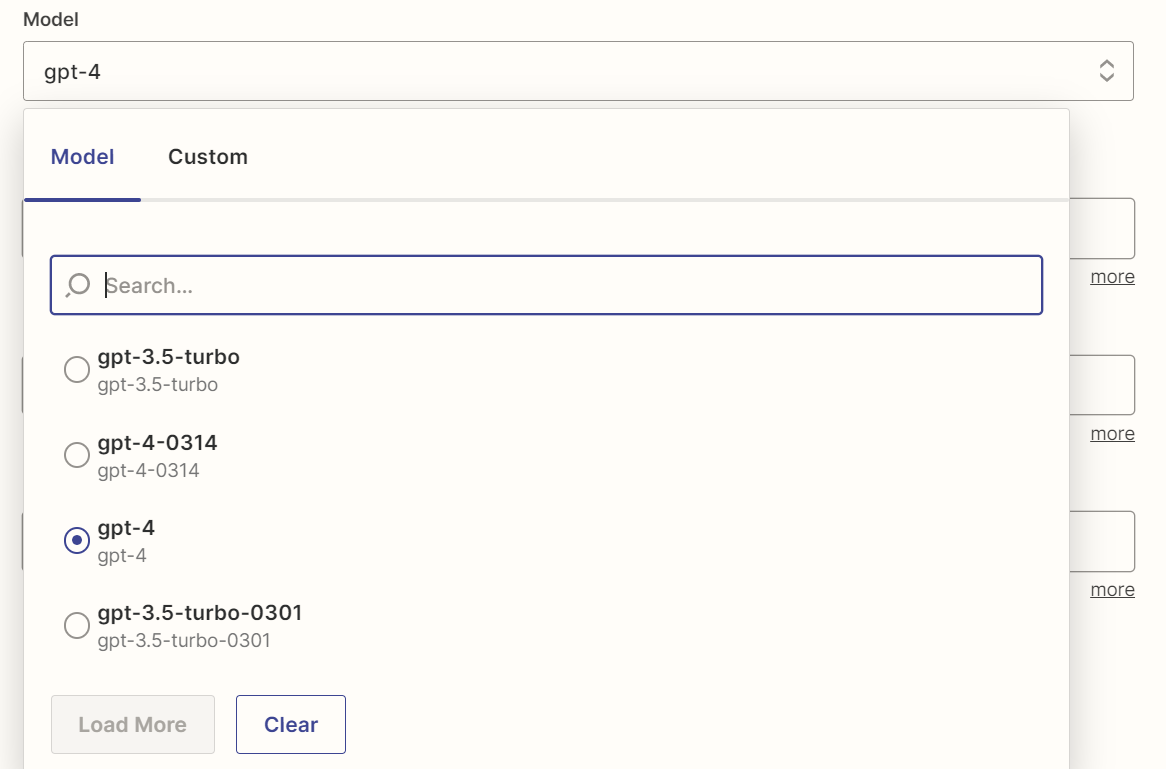

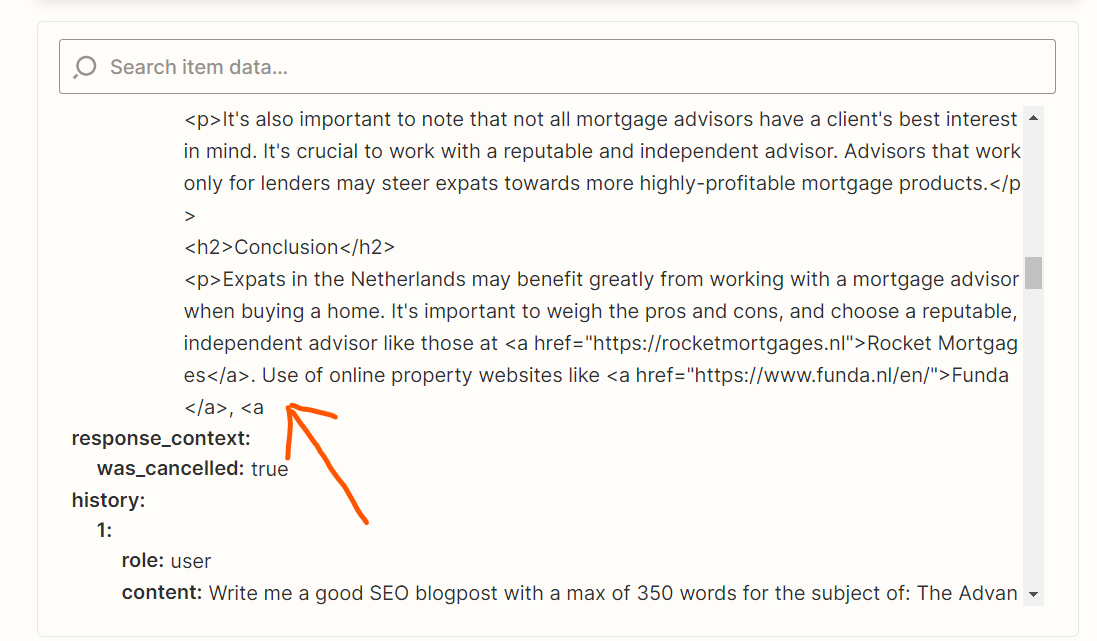

The ChatGPT conversation app does not let you set the max token length. I believe it defaults to 256 in the Playground. Has anyone found a fix for this, or does anyone know what the default is in Zapier?


Best answer by SamB

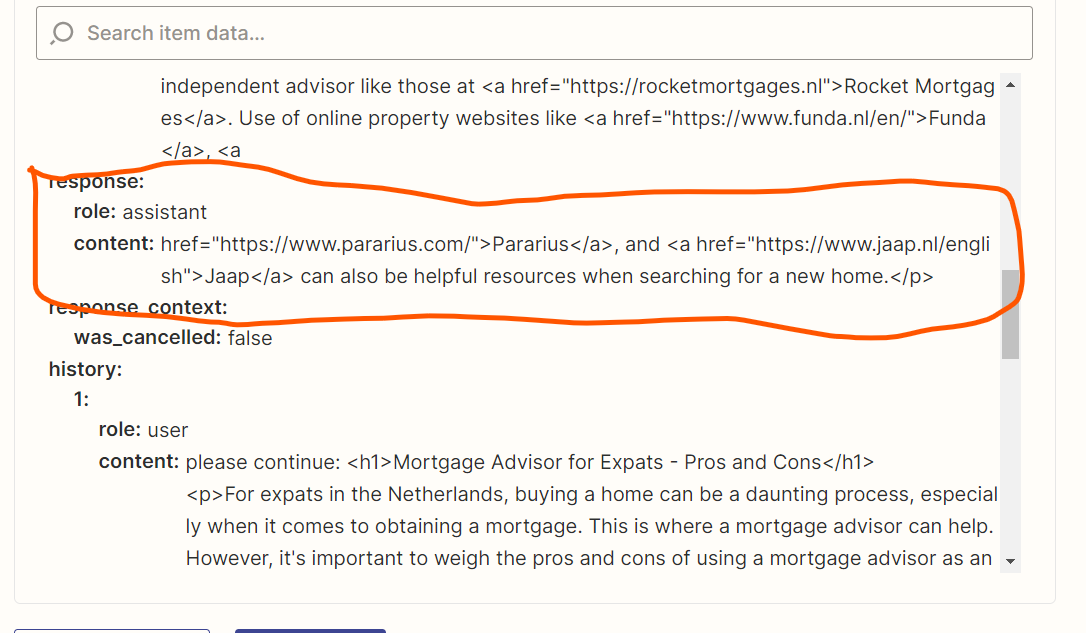

Hi friends! Just following up with an update to confirm that the feature request to remove the cutoff was closed recently! 😁🎉

In case you missed the email notification from us, here’s what you need to know:

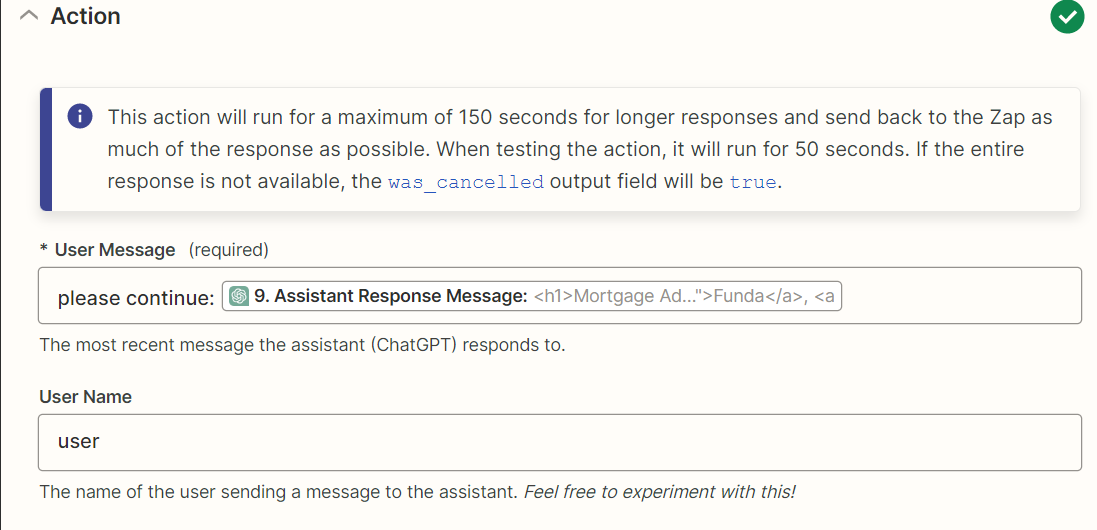

“We have implemented a new version of the connection that can take up to 15 minutes to process, so there should no longer be any cut-off messages in your Zap runs. When testing Zaps in the editor, you may still find that the response is cut off, because we still time out that call at 50 seconds so that users can continue to set up their Zaps effectively.

As part of this change, you will no longer see the field `was_cancelled` set to true after a ChatGPT step runs, only during Zap testing. I hope this helps you in automating your work on Zapier.”
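If you want a later step to catch a truncated reply while you are still testing, a rough sketch like the one below could go in a Code step. Only the `was_cancelled` field name comes from the note above. The `reply` key, the sample data, and the helper name `reply_was_cut_off` are hypothetical, and the string handling assumes the flag may arrive as text rather than as a boolean.

```python
def reply_was_cut_off(step_output: dict) -> bool:
    """Return True if the step output reports a cancelled (timed-out) call."""
    # `was_cancelled` is the field named in the update; treat a missing field as False.
    flag = step_output.get("was_cancelled", False)
    # Assumption: the value may be passed between steps as the text "true"/"false".
    if isinstance(flag, str):
        return flag.strip().lower() == "true"
    return bool(flag)


# Hypothetical step outputs; the `reply` key is illustrative only.
editor_test = {"reply": "Here is the first part of the ans", "was_cancelled": True}
live_run = {"reply": "Here is the full answer.", "was_cancelled": False}

for name, output in [("editor test", editor_test), ("live run", live_run)]:
    status = "cut off" if reply_was_cut_off(output) else "complete"
    print(f"{name}: {status}")
```

Based on the update, this check should only ever return true for tests in the editor, since live Zap runs no longer set the flag.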

Happy Zapping! ⚡
