I have a workflow that needs to EDIT an existing image (remove the background, adjust lighting, add a shadow, color correct, etc.) and I want to do this using OpenAI GPT. When testing this in the OpenAI API sandbox, I can submit a file (an image such as a JPG) as part of the prompt.

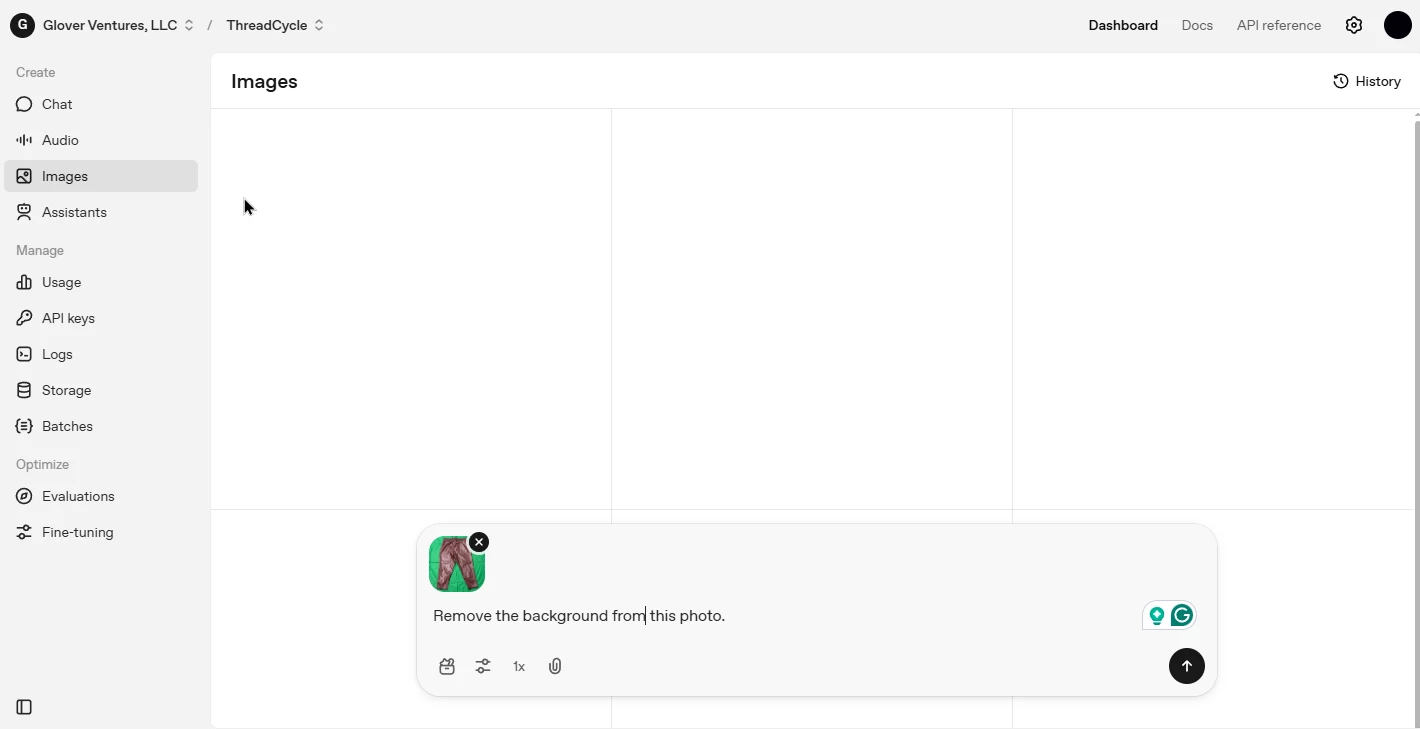

EXAMPLE:

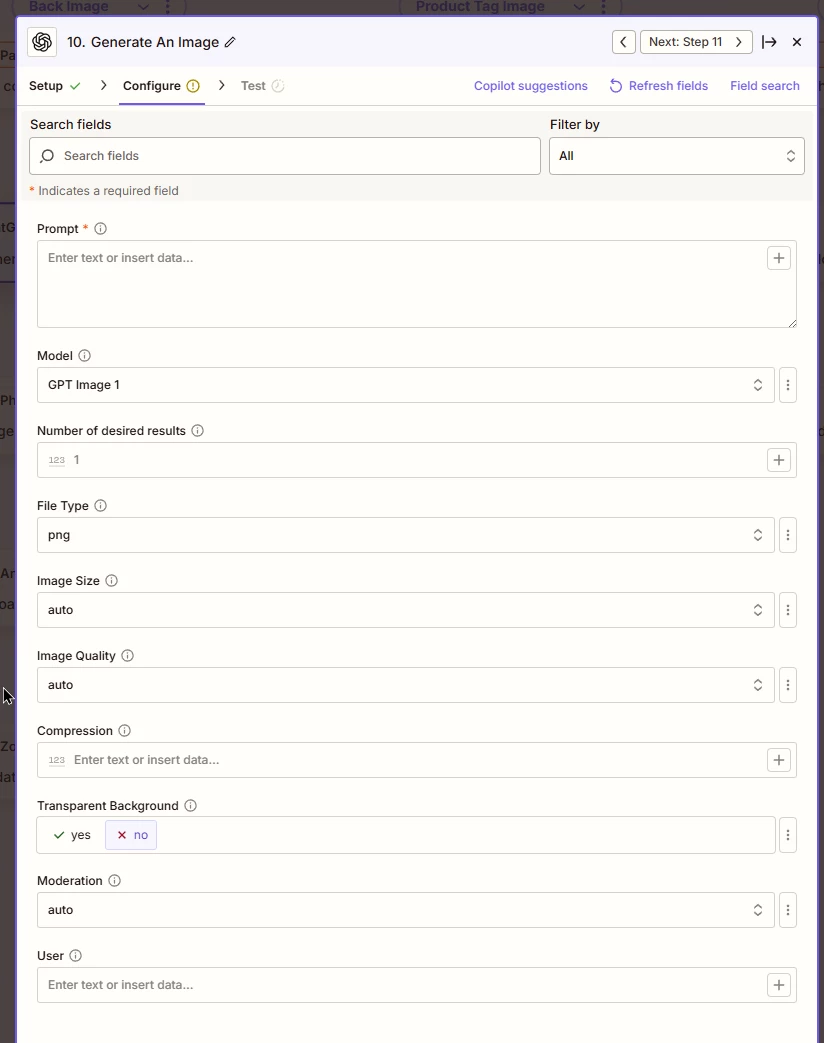

However, the Zapier “Generate an image” action event does not let me include a file in the field, and I get incredibly shoddy and inconsistent results when I simply reference the file by URL in the prompt, compared with the direct upload I tested in the OpenAI sandbox.

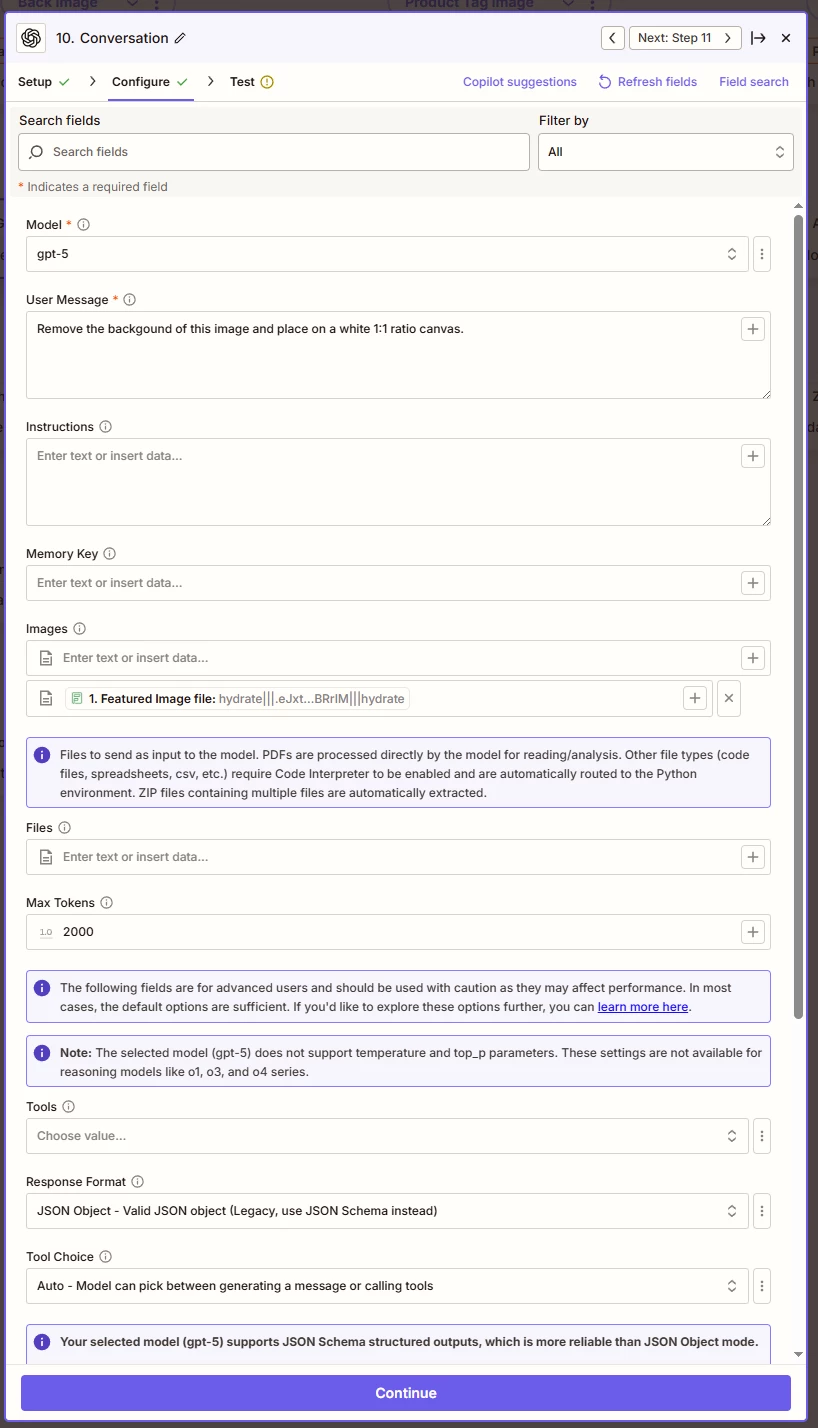

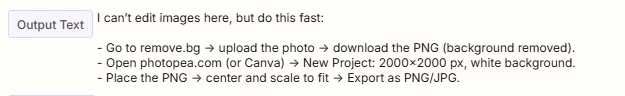

Other action events, such as “Conversation”, support file upload. However, these configurations limit the response to ONLY text, and I cannot coax ChatGPT into returning the file in plain-text format (Base64) with this approach, no matter which model I use (including multi-modal models like GPT-5).

It appears as though Zapier locks down the multi-modal aspect of these models to a limited degree, at least in the response.

Does anyone have a viable workaround for this, or can someone from Zapier say when we can expect an image upload option on the “Generate an image” action event for this module? Ideally it would include the image fidelity options described in the OpenAI cookbook here: https://cookbook.openai.com/examples/generate_images_with_high_input_fidelity
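
For reference, this is roughly the direct upload I am trying to reproduce, written as a minimal Python sketch against OpenAI's image edits endpoint rather than anything Zapier provides. Assumptions on my part: the `gpt-image-1` model, the `input_fidelity` field from the cookbook linked above, and a Base64 result under `data[0].b64_json`. The function names `build_edit_request` and `edit_image` are hypothetical, and I have not confirmed that a Zapier code or webhook step can run this as-is.

```python
import base64
import json
import urllib.request
import uuid

# Assumed endpoint for image edits with a directly uploaded file.
OPENAI_EDITS_URL = "https://api.openai.com/v1/images/edits"


def build_edit_request(api_key, image_bytes, prompt,
                       filename="input.jpg", mime="image/jpeg",
                       model="gpt-image-1", input_fidelity="high"):
    """Build (but do not send) a multipart/form-data request.

    The image is attached as a real file part instead of being
    referenced by URL inside the prompt text.
    """
    boundary = uuid.uuid4().hex
    # Plain text form fields; model and input_fidelity are assumptions.
    fields = {"model": model, "prompt": prompt,
              "input_fidelity": input_fidelity}
    parts = []
    for name, value in fields.items():
        parts.append(
            f'--{boundary}\r\n'
            f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
            f'{value}\r\n'.encode("utf-8")
        )
    # File part carrying the raw image bytes.
    parts.append(
        f'--{boundary}\r\n'
        f'Content-Disposition: form-data; name="image"; '
        f'filename="{filename}"\r\n'
        f'Content-Type: {mime}\r\n\r\n'.encode("utf-8")
    )
    parts.append(image_bytes)
    parts.append(f"\r\n--{boundary}--\r\n".encode("utf-8"))
    return urllib.request.Request(
        OPENAI_EDITS_URL,
        data=b"".join(parts),
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
    )


def edit_image(api_key, image_bytes, prompt):
    """Send the request and return the edited image as raw bytes."""
    req = build_edit_request(api_key, image_bytes, prompt)
    with urllib.request.urlopen(req, timeout=120) as resp:
        payload = json.load(resp)
    # Assumes the response carries the image as Base64 text.
    return base64.b64decode(payload["data"][0]["b64_json"])
```

Calling `edit_image(api_key, open("photo.jpg", "rb").read(), "Remove the background and add a soft shadow")` would return the edited image bytes if the assumptions above hold. Splitting the request building from the sending keeps the upload format easy to inspect without spending API credits.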