Hi,

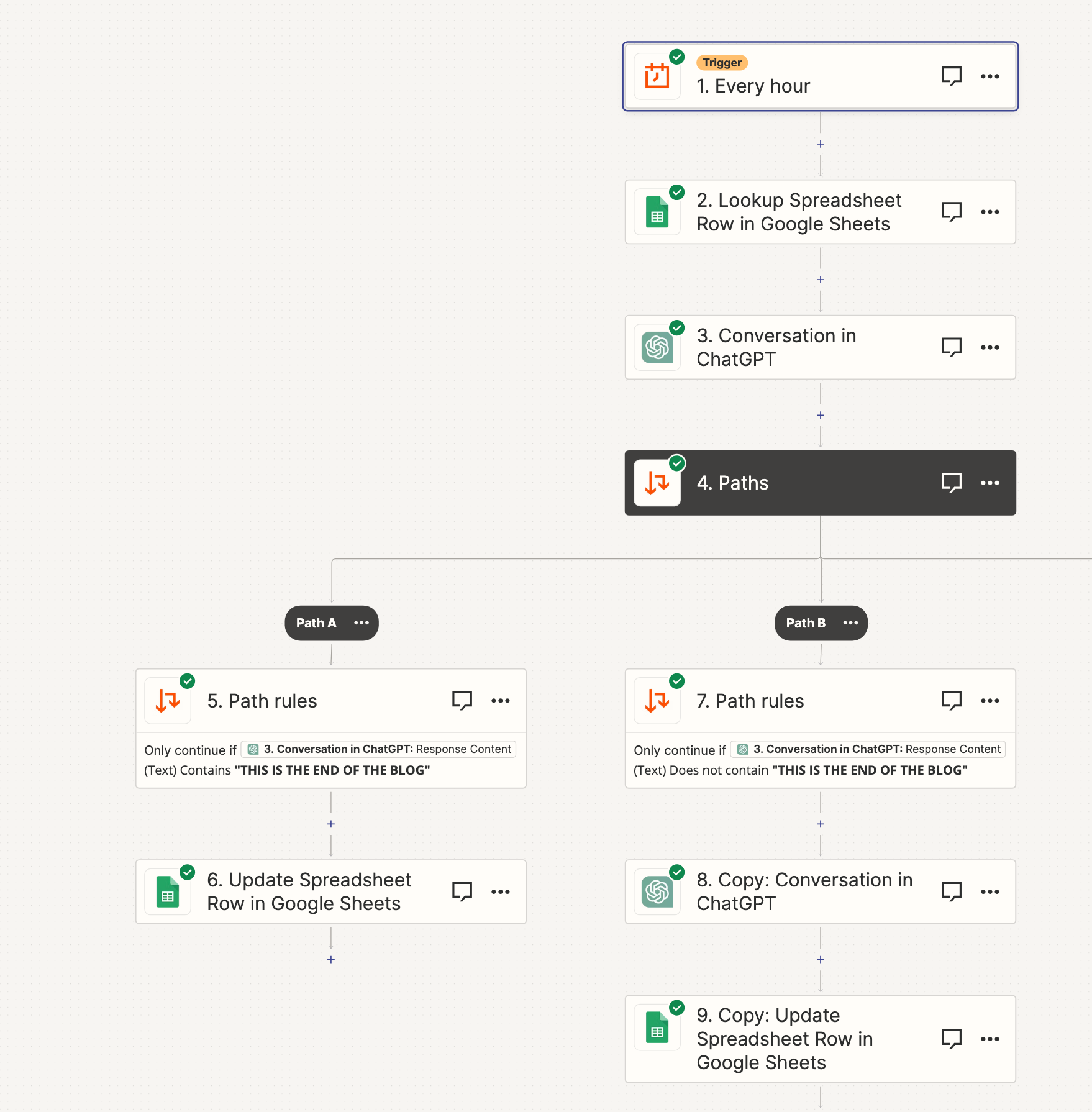

I am trying to create an automation to update and rewrite existing posts on our blog.

I am organizing my data in Google Sheets.

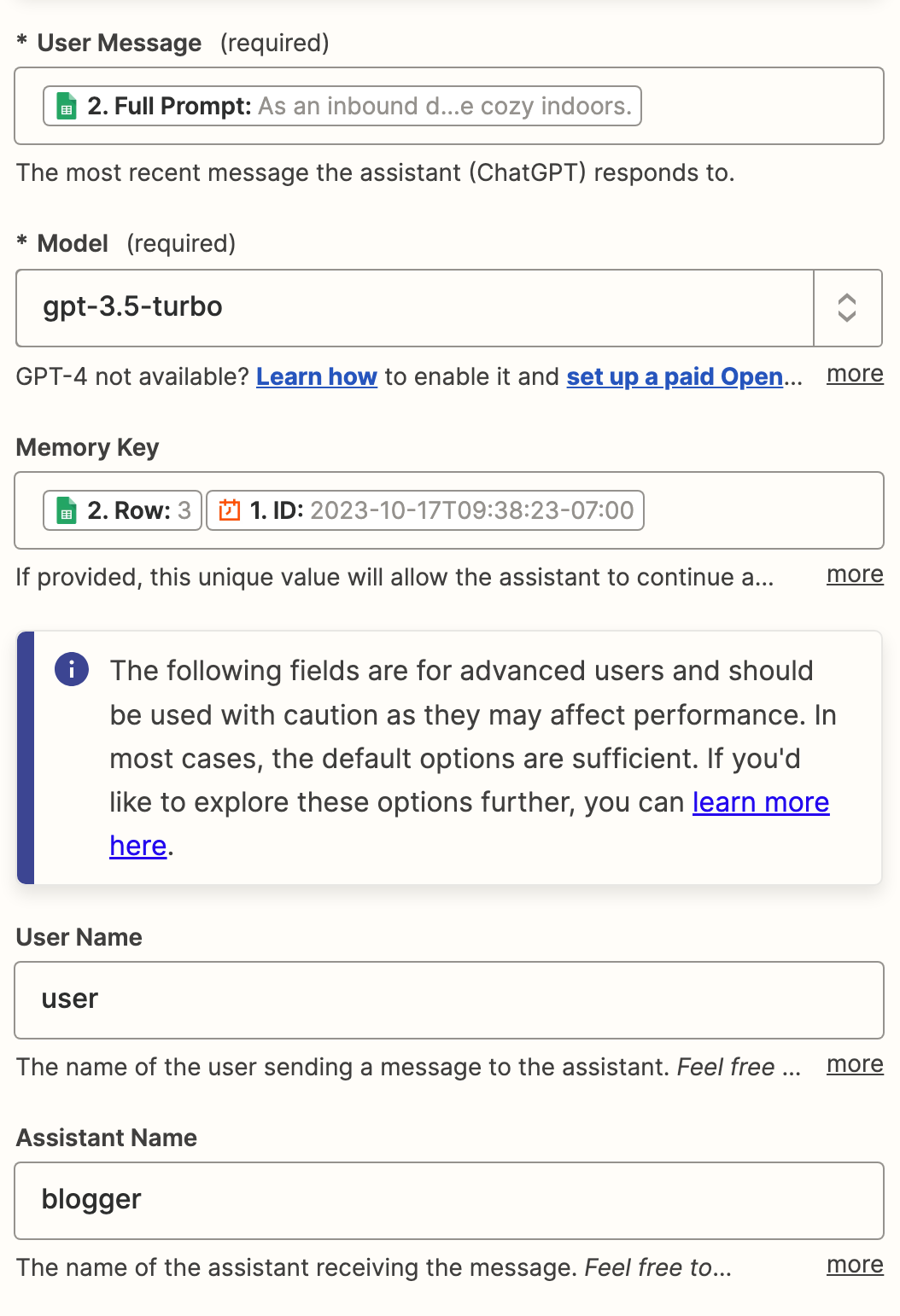

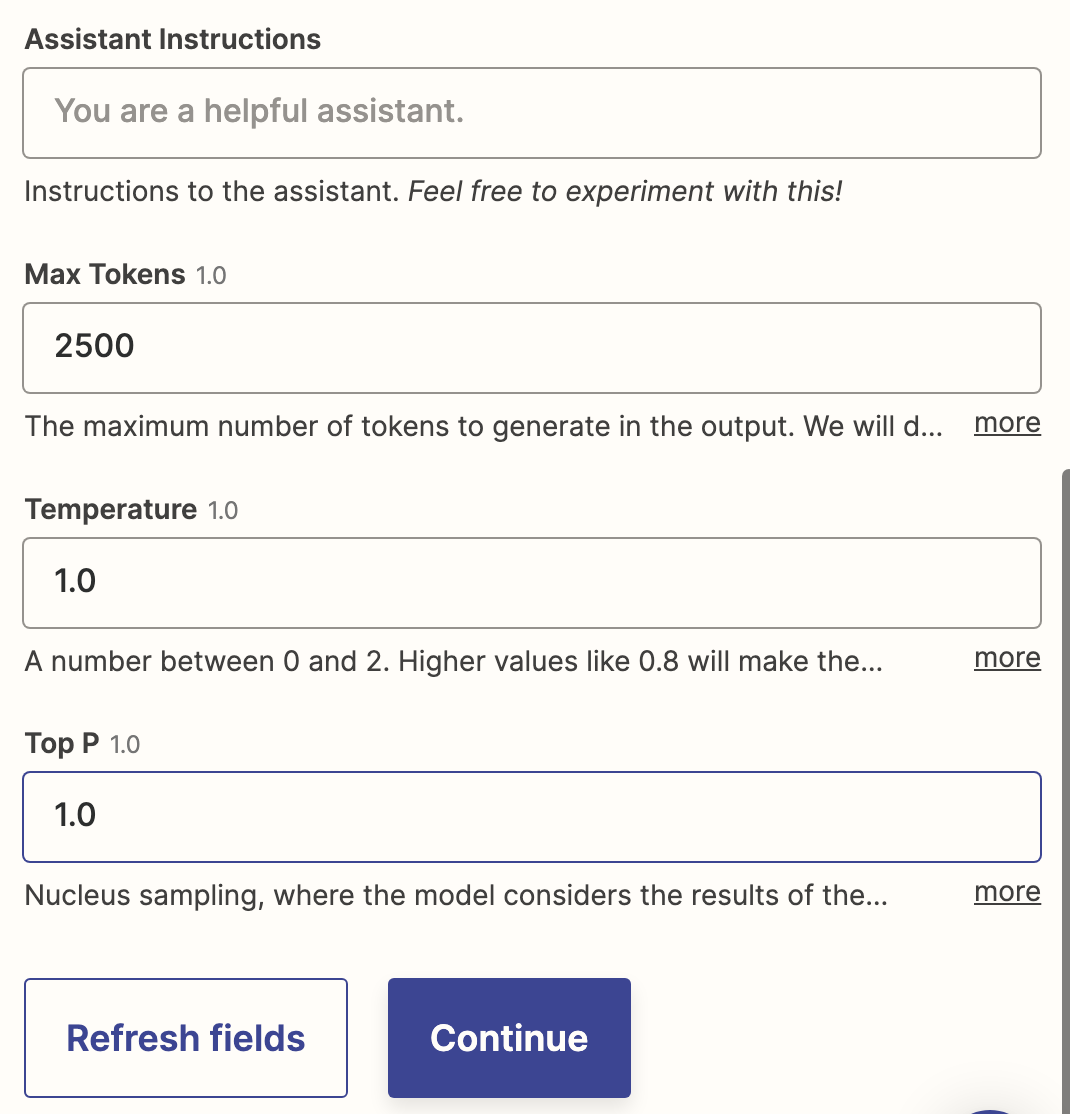

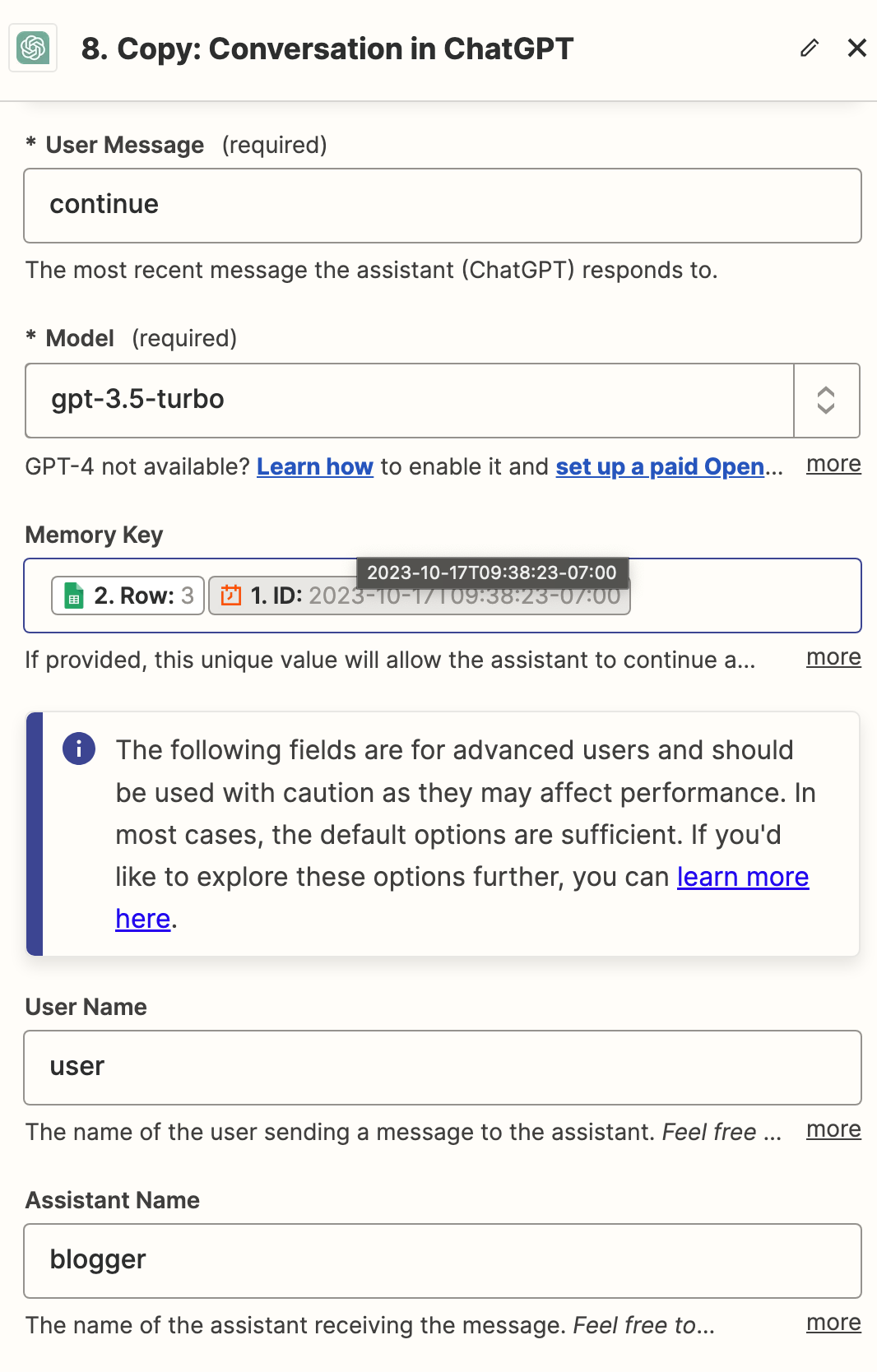

When I get to the first ChatGPT zap element, I enter the complete prompt. I use a memory key of [row number of my sheet][time/date element from trigger], which should be unique every time.
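For reference, here is a minimal sketch of that kind of per-run key, assuming the row number arrives as an integer and the trigger time as a timestamp. The function name `make_memory_key` and the `row…-…` format are hypothetical and are not Zapier's own. The separator keeps the two parts unambiguous: without it, row 1 + "12:00" and row 11 + "2:00" would both concatenate to "112:00".

```python
from datetime import datetime, timezone


def make_memory_key(row_number: int, trigger_time: datetime) -> str:
    """Build a per-run memory key from a sheet row and the trigger timestamp.

    Hypothetical helper for illustration; the format is an assumption.
    """
    # Fixed-width UTC timestamp so every key has a predictable shape.
    stamp = trigger_time.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    # The "-" separator prevents row/time digits from running together.
    return f"row{row_number}-{stamp}"


key = make_memory_key(12, datetime(2023, 5, 4, 9, 30, tzinfo=timezone.utc))
print(key)  # row12-20230504T093000Z
```

Whatever the exact format, the point is that both ChatGPT zap elements must receive the identical key string for the second one to see the first one's history.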

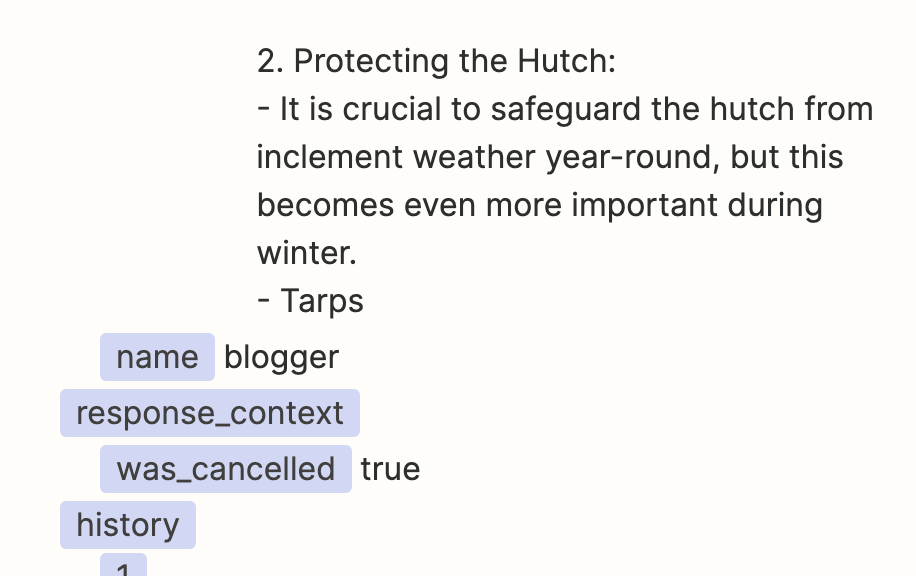

The response times out, so I get a “true” in the was_cancelled field. However, the first 300-400 words of the response are captured.
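A check like the following could decide whether a follow-up "continue" step is needed at all. It is a sketch that assumes the step output is available as a dictionary with a `was_cancelled` field (as in the screenshot) and a `response` field; the `response` key name and the `needs_continuation` helper are hypothetical. Because the flag may show up either as a boolean or as the text "true", both forms are handled.

```python
def needs_continuation(step_output: dict) -> bool:
    """Return True when a ChatGPT step reports that it was cut off."""
    flag = step_output.get("was_cancelled", False)
    # The flag may arrive as a real boolean or as the text "true"/"false".
    if isinstance(flag, str):
        return flag.strip().lower() == "true"
    return bool(flag)


partial = {"response": "The first 300-400 words...", "was_cancelled": "true"}
print(needs_continuation(partial))  # True
```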

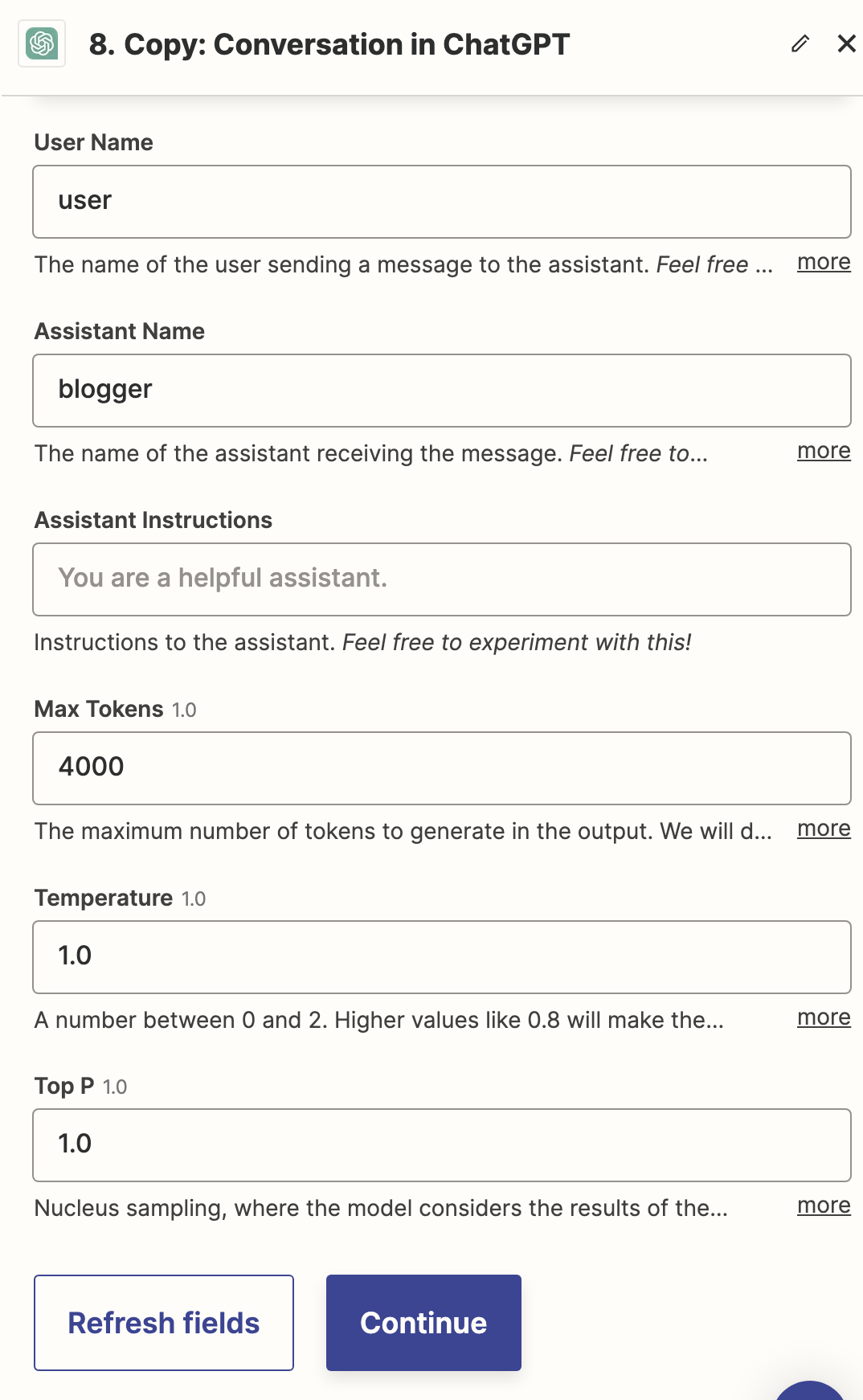

I added another ChatGPT zap element with the intent of prompting the AI to “continue,” as I would with the live chat functionality on the site.

So for the second ChatGPT zap element, I input just “continue” in the user message, using the same memory key as in the first element. I get an output like this:
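For comparison, this is how a "continue" request is usually structured when calling a chat model directly with OpenAI-style `role`/`content` messages. It is a sketch and not a description of how the Zapier step stores memory internally. "Continue" can only pick up where the text stopped if the cut-off text is present in the history as a prior `assistant` turn; if that turn is missing, the model has nothing to continue from. The helpers and `call_model` (a stand-in returning `(text, was_cancelled)`) are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Message = Dict[str, str]


def build_continuation_messages(prompt: str, partial_reply: str) -> List[Message]:
    """History for a "continue" request: original prompt, cut-off reply, nudge."""
    return [
        {"role": "user", "content": prompt},
        # The partial text must be here, or "continue" has no anchor.
        {"role": "assistant", "content": partial_reply},
        {"role": "user", "content": "Continue exactly where you left off. Do not repeat earlier text."},
    ]


def generate_with_continuations(
    prompt: str,
    call_model: Callable[[List[Message]], Tuple[str, bool]],
    max_rounds: int = 5,
) -> str:
    """Request continuations until a reply finishes or max_rounds is reached.

    call_model is a hypothetical stand-in that takes a message list and
    returns (text, was_cancelled).
    """
    messages: List[Message] = [{"role": "user", "content": prompt}]
    parts: List[str] = []
    for _ in range(max_rounds):
        text, was_cancelled = call_model(messages)
        parts.append(text)
        if not was_cancelled:
            break
        # Feed everything generated so far back as the assistant turn.
        messages = build_continuation_messages(prompt, "".join(parts))
    # Pieces are joined as-is; the model is expected to supply any spacing.
    return "".join(parts)


# Usage with a fake model that cuts off once, then finishes.
replies = iter([("Part one. ", True), ("Part two.", False)])


def fake_model(messages: List[Message]) -> Tuple[str, bool]:
    return next(replies)


print(generate_with_continuations("Rewrite this post...", fake_model))  # Part one. Part two.
```

Whether the Zapier memory key actually saves a cancelled response as an assistant turn is not something this sketch can confirm; it only shows the shape the history needs for "continue" to work.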

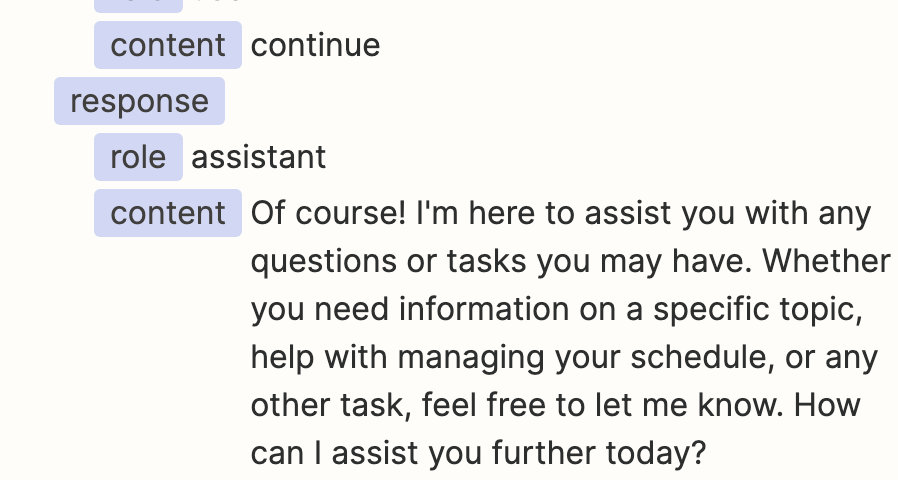

“Certainly! In our previous conversation, we were discussing how I can assist you. How may I help you today?”

It appears that all of the history is in the test output, but it will not output the continuation of the blog post that was cut off.

Any help would be appreciated. Thanks.

Jason