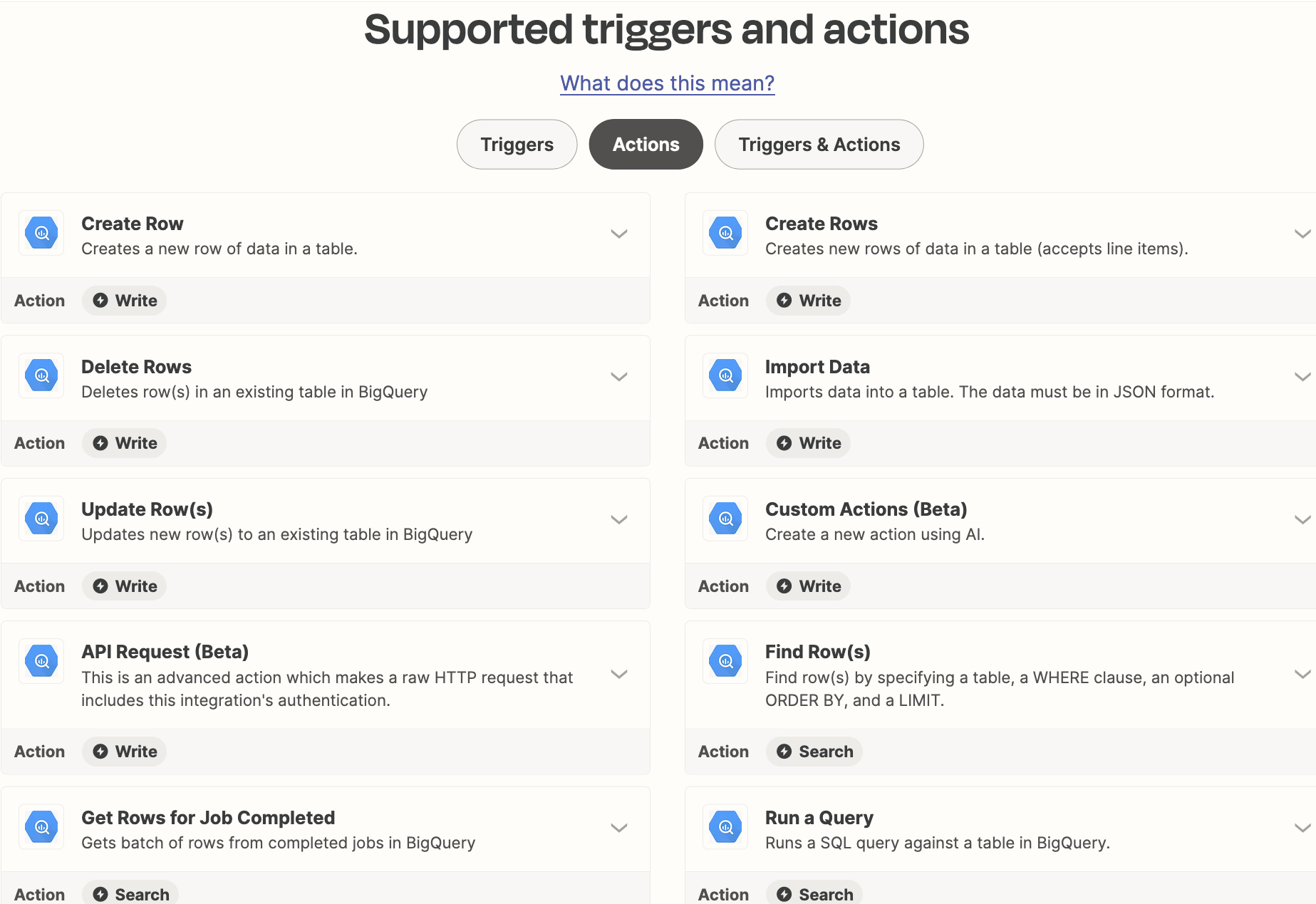

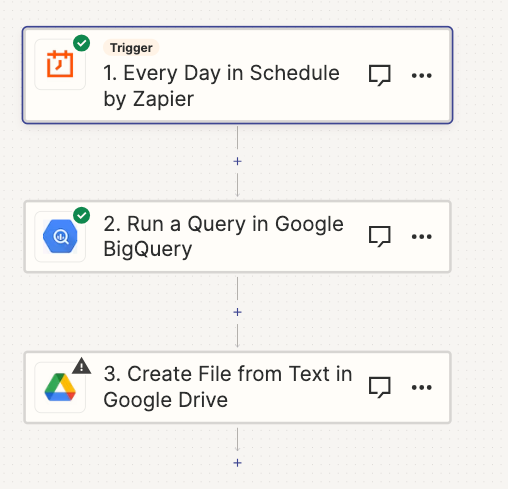

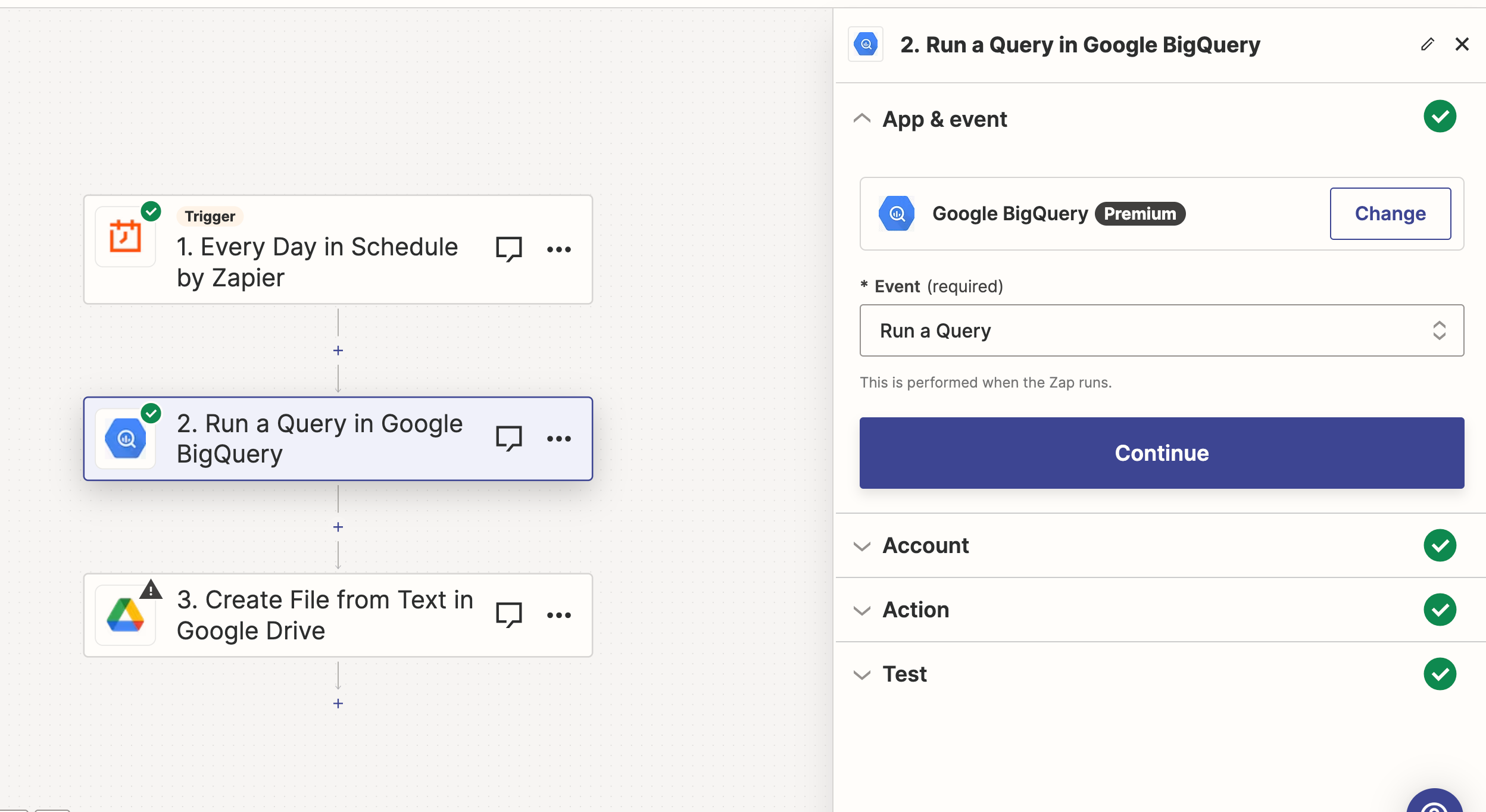

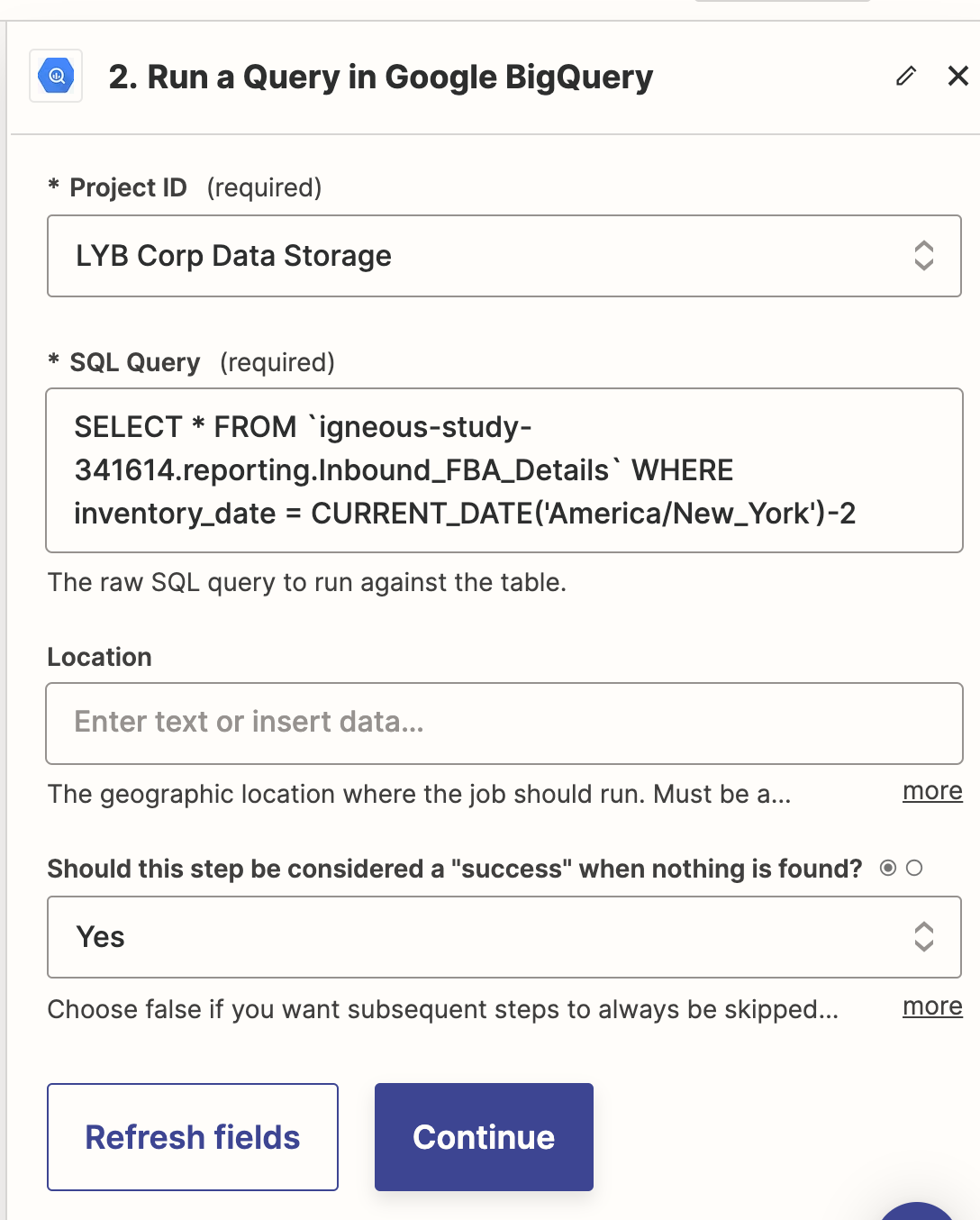

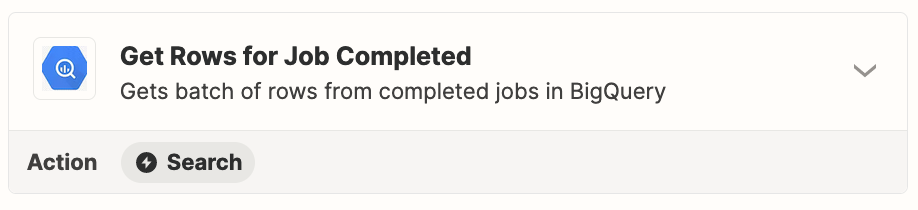

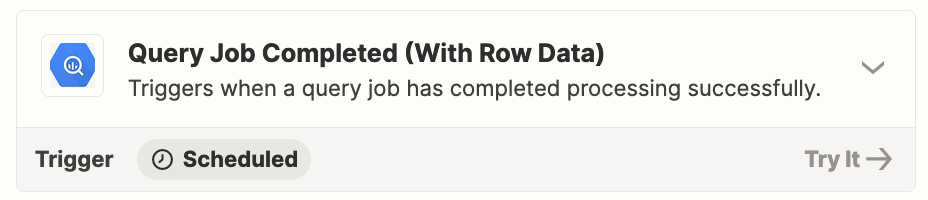

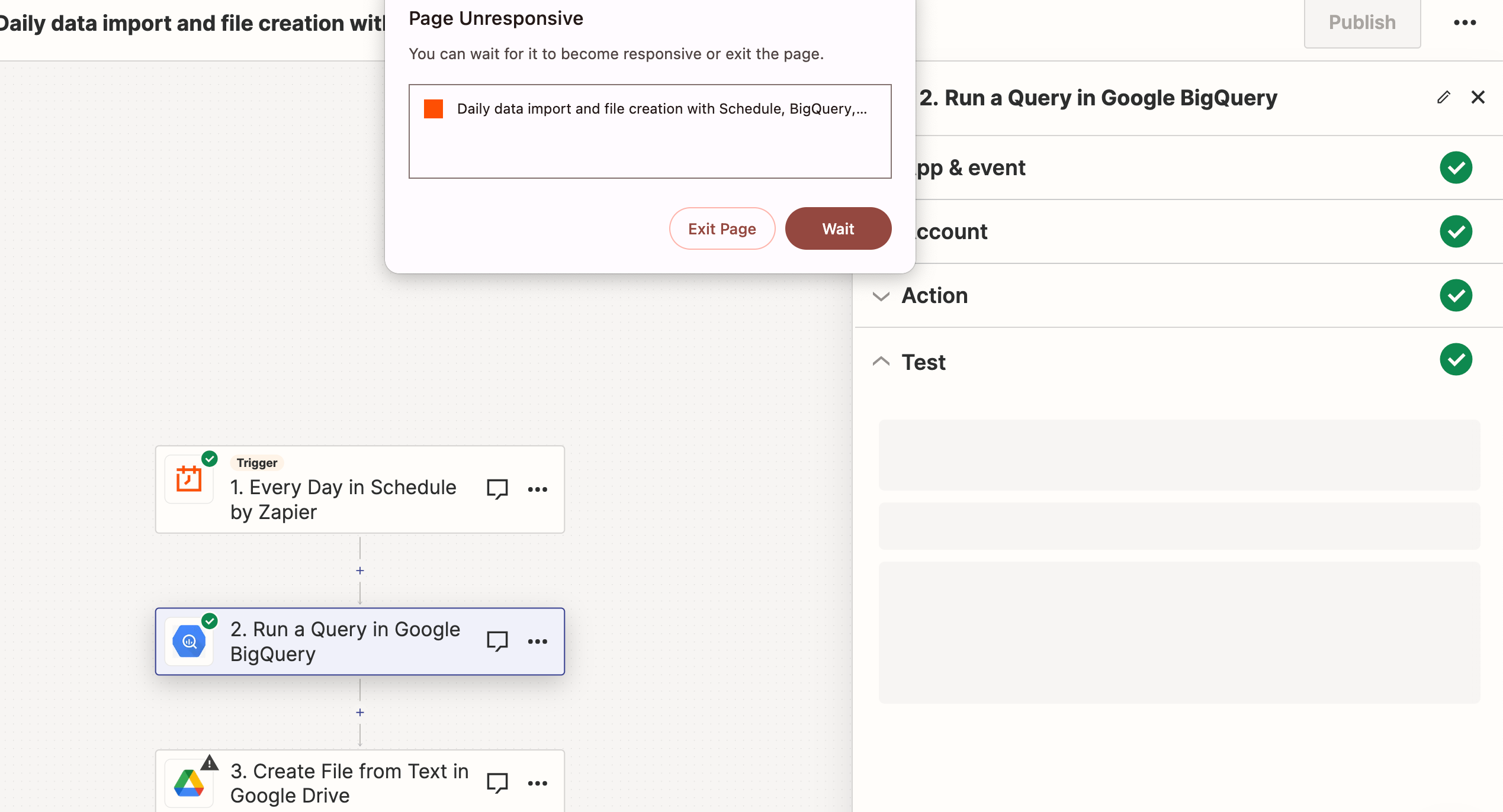

I want to run a query on a schedule and save the results to a new Google Sheet in a specified folder every day. I would like the sheet to be named ABC_{DATE_TIME}, where DATE_TIME is the date and time the sheet was created.

Google's Connected Sheets overwrites the existing data and is limited to 50K rows, so I am looking for a no-code or low-code solution using Zapier.
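For the naming part, one low-code option is a Code by Zapier (Python) step that builds the title before the step that creates the spreadsheet. The sketch below is illustrative only. `build_sheet_title` is a hypothetical helper, and the `YYYY-MM-DD_HH-MM-SS` timestamp format is an assumption, since the post does not fix one. Inside a Code by Zapier step you would typically assign something like `output = {"title": build_sheet_title()}` and map that field into the sheet name. Also note that the step's clock may be in UTC rather than your local timezone, which is worth checking.

```python
from datetime import datetime
from typing import Optional


def build_sheet_title(prefix: str = "ABC", now: Optional[datetime] = None) -> str:
    """Return a sheet title such as ABC_2024-05-01_06-30-00 (hypothetical format)."""
    # Use the current time of the machine running the step when no timestamp is given;
    # in a hosted environment this may be UTC, not your local time.
    now = now or datetime.now()
    # Hyphens instead of colons keep the title readable and avoid characters
    # that some file-naming tools treat specially.
    return f"{prefix}_{now.strftime('%Y-%m-%d_%H-%M-%S')}"


# Example: a fixed timestamp gives a predictable title.
print(build_sheet_title("ABC", datetime(2024, 5, 1, 6, 30, 0)))
```

Passing the timestamp in explicitly keeps the helper easy to test; in the scheduled Zap you would call it with no arguments so it picks up the run time.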

Thanks