Hey All.

A problem many of you have been running into as AI becomes part of more workflows is the token limit of each LLM. To help work around this, we’ve released a new beta Text transform for Formatter: Split Text into Chunks for AI Prompt (beta). In AI parlance, a “chunk” is a segment of text small enough to fit under your LLM’s token limit.

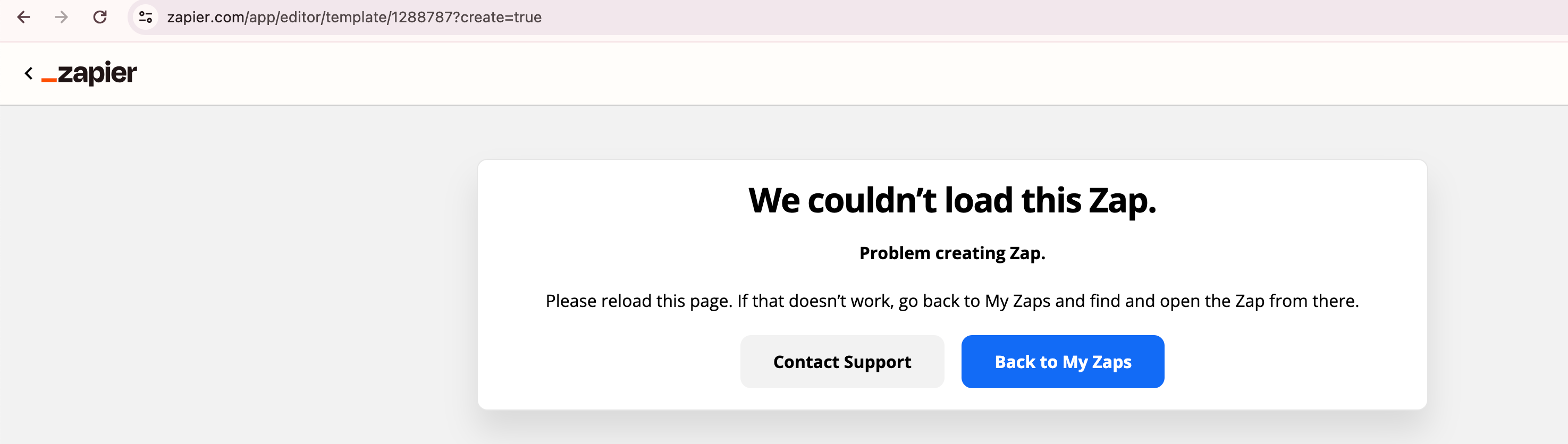
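If you're curious what chunking involves, here is a minimal Python sketch of the general idea. It is not how the Formatter transform works internally. It assumes a rough rule of thumb of about 4 characters per token, though real tokenizers vary by model. The function names `estimate_tokens` and `split_into_chunks` are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    # Assumption: roughly 4 characters per token. Real tokenizers differ by model.
    if not text:
        return 0
    return max(1, len(text) // 4)


def split_into_chunks(text: str, max_tokens: int) -> list[str]:
    """Greedily pack whole words into chunks whose estimated token count
    stays at or under max_tokens.

    Note: a single word longer than the budget still becomes its own
    (oversized) chunk, because this sketch never splits inside a word.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")

    chunks: list[str] = []
    current: list[str] = []
    for word in text.split():
        candidate = " ".join(current + [word])
        if current and estimate_tokens(candidate) > max_tokens:
            # Adding this word would exceed the budget, so close the current chunk.
            chunks.append(" ".join(current))
            current = [word]
        else:
            current.append(word)

    if current:
        chunks.append(" ".join(current))
    return chunks
```

For example, `split_into_chunks("one two three four five six seven eight nine ten", max_tokens=3)` returns `["one two three", "four five six", "seven eight", "nine ten"]` under this heuristic. Packing whole words avoids cutting a word in half. A more careful chunker might also respect sentence or paragraph boundaries, or overlap chunks slightly so context carries over between prompts.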

We have some initial help for this here, and we’ve included a Zap Template to help get you started. It’s a fairly complex workflow that we’d like to simplify over time.

If you build out a Zap using this transform, we’d love feedback on how it goes.

Kirk