The ChatGPT Conversation app doesn't allow you to set the max token length. By default, I believe it's set to 256 when you go into the Playground. Has anyone found a fix for this, or does anyone know what the default is set to in Zapier?

Hi

Welcome to the Community! I'm sorry you’re having trouble with your Zap.

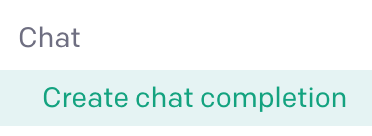

This model’s maximum context length is 4096 tokens.

You can see more on tokens and how they are counted here: https://help.openai.com/en/articles/4936856-what-are-tokens-and-how-to-count-them.
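Because the prompt and the answer share that context length, the room left for the answer is roughly the limit minus the prompt's tokens. Here is a minimal sketch of that arithmetic. The function name is hypothetical, and it assumes you already know the prompt's token count (for example, counted as described in the article above).

```python
def max_completion_tokens(prompt_tokens: int, context_length: int = 4096) -> int:
    """Return how many tokens are left for the answer.

    The context length covers prompt + completion together, so a long
    prompt leaves less room for the reply. (Hypothetical helper.)
    """
    if prompt_tokens < 0 or context_length <= 0:
        raise ValueError("prompt_tokens must be >= 0 and context_length > 0")
    # Never report a negative budget; 0 means the prompt alone fills the window.
    return max(context_length - prompt_tokens, 0)


# A 3,000-token prompt on a 4,096-token model leaves 1,096 tokens for the reply.
print(max_completion_tokens(3000))  # 1096
```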

Hi

Thanks for trying to help out here.

I believe I have the same problem as

Personally, I'd happily set that limit to 1000 by default to retrieve the full answer from the Playground. As it is now, with the 256-token limit, the answers I receive seem to be cut off halfway through.

My question is: is it possible to increase that default limit of 256 tokens? That would solve my problem of receiving answers from the Playground that are cut off halfway.

Hi!

Same issue here. Most of my ChatGPT answers are cut off.

It seems the token count includes both the prompt and the answer, which might explain my issue. Does anyone have an idea how to work around this?

Thanks !

Hi

The workaround would be to use the OpenAI API for Chat: https://platform.openai.com/docs/api-reference/chat/create

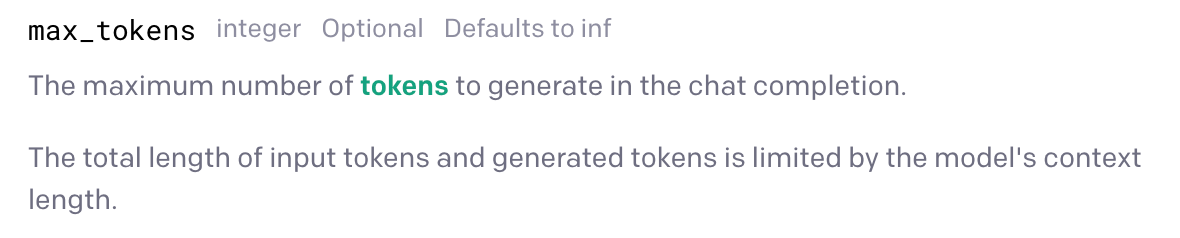

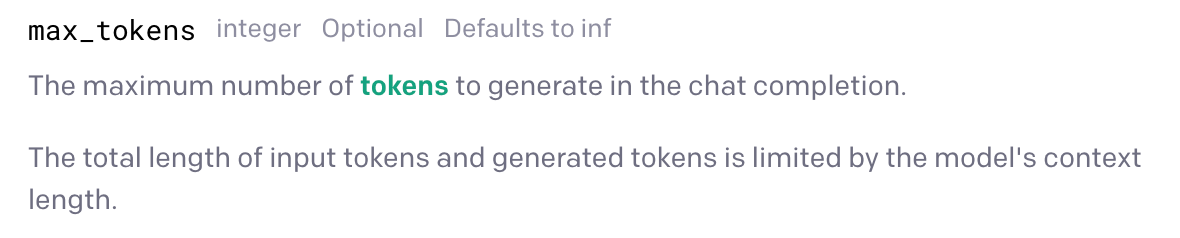

You might be able to use this Zap action: OpenAI - API Request

OR App APIs can be used in Zaps with these apps:


I believe Zapier could give us the option to adjust the token limit right after we set the temperature. As a non-developer user of Zapier, I don't think it would be a very challenging update.

Alternatively, here is another option Zapier’s team members could look into:

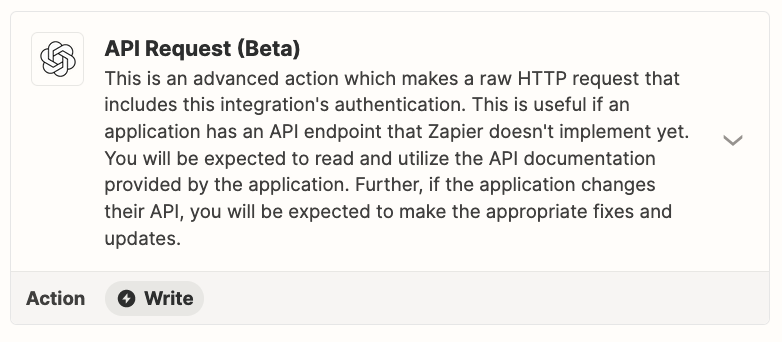

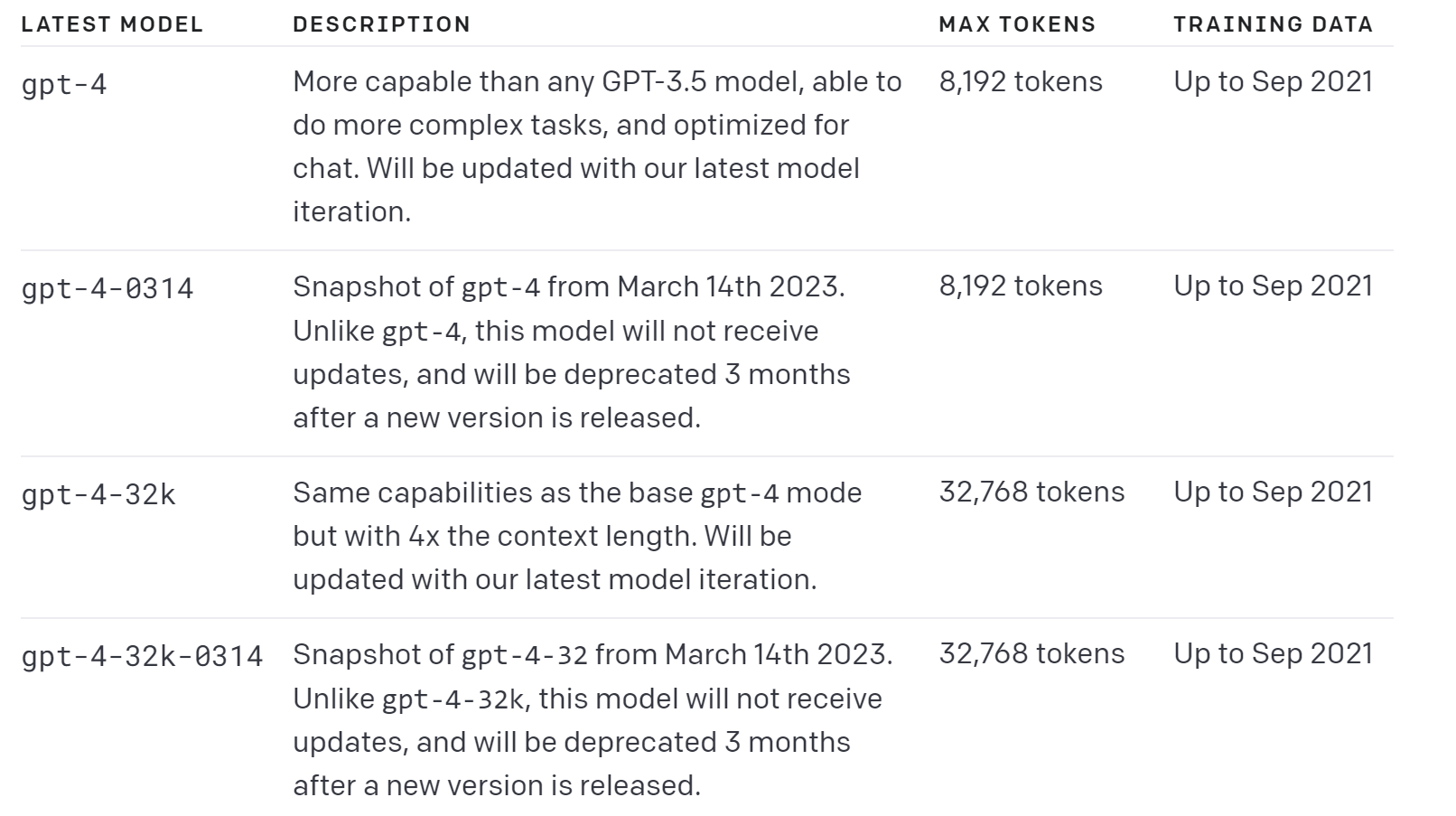

1. As of now, we are offered the following models:
   - gpt-3.5-turbo
   - gpt-3.5-turbo-0301
   - gpt-4
   - gpt-4-0314
2. But looking at OpenAI’s model information, it seems like we could have access to gpt-4-32k, which would give us 4x the tokens.

Does my rationale make sense? What am I missing?

I’d love to brainstorm ways to make this work. Zapier only stands to gain from these GPT integrations.

Thanks and let's goooo!


I know this is a lot to ask, but is there any chance you could record a step-by-step video on setting up the API Request workaround?

I got it fixed! It will cost you an additional task, though, but the solution is way simpler than we were thinking. I am using GPT-3.5, so it should be available to everyone.


I usually use ChatGPT to write blog posts, and a lot of the time the text is cut off halfway through the article. I was struggling big time and hoped this could be fixed easily by fine-tuning the prompt and asking for a maximum word count, but it didn't help. The solution I just found is the following:

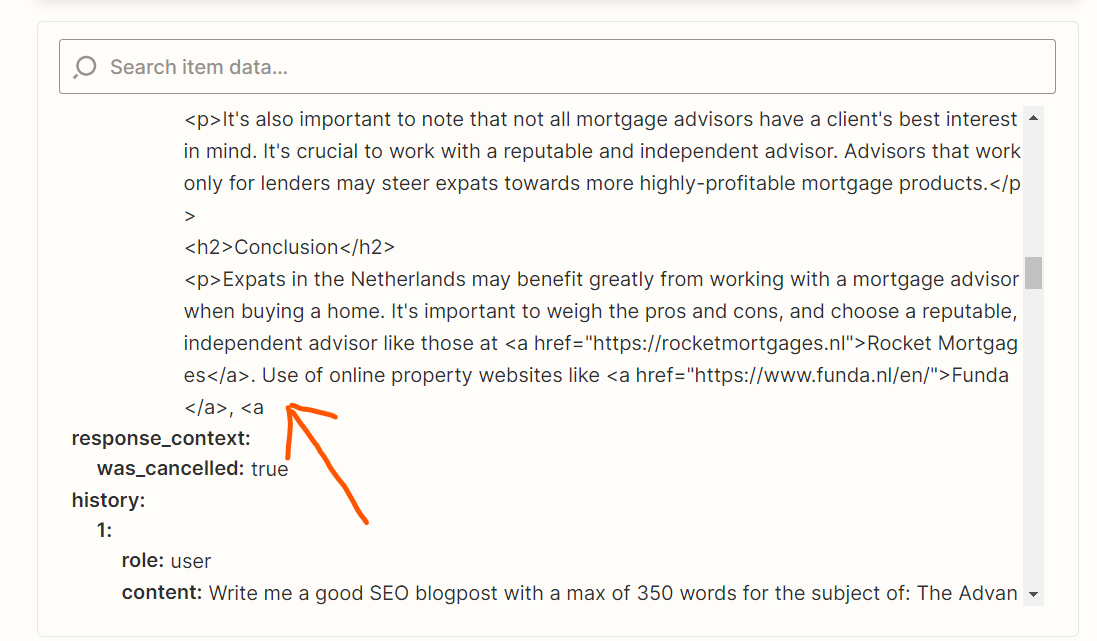

Use ChatGPT in an action step and put in your full prompt. Also test this step to make sure the output from ChatGPT is indeed cut off.

First action

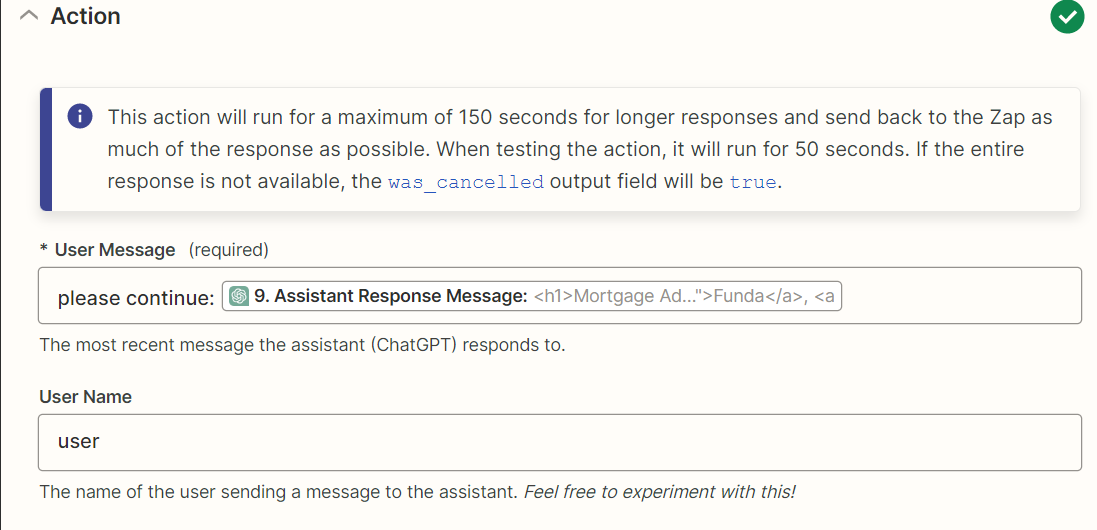

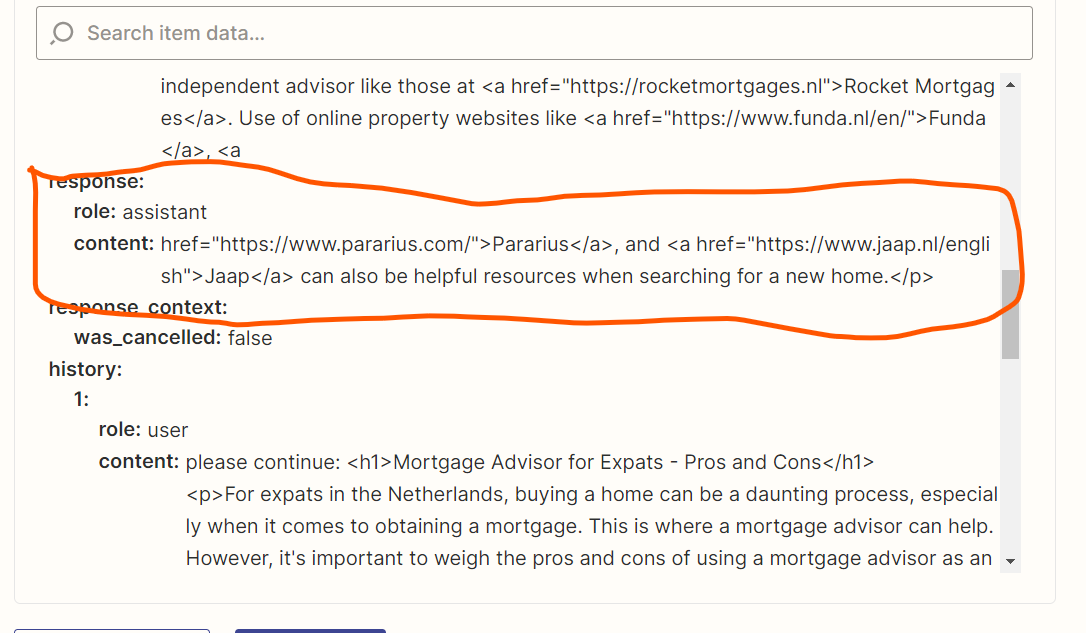

Then create a new action below it. All you have to put in there is: `Please continue: [put here the response from the previous ChatGPT answer]`. ChatGPT will then continue where it left off, which resolves the cut-off issue. You could probably also automate this entire process by replicating the actions and letting them continue until `was_cancelled` is `False`; as you can see, the first response was cancelled and the second one wasn't.

The prompt

The outcome

The downside is that an additional task is used, and there are two responses that need to be joined together.
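The automation suggested above (repeat the "Please continue" step until `was_cancelled` is `False`, then join the responses) can be sketched like this. `send_chat` is a hypothetical stand-in for one ChatGPT step that returns the reply text and the `was_cancelled` flag; it is not a real Zapier or OpenAI function.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-in for one ChatGPT step: takes a message and returns
# (reply_text, was_cancelled). Not a real Zapier or OpenAI function.
ChatFn = Callable[[str], Tuple[str, bool]]


def generate_full_reply(send_chat: ChatFn, prompt: str, max_rounds: int = 5) -> str:
    """Send the prompt, then 'Please continue: ...' while replies are cut off."""
    parts: List[str] = []
    text, was_cancelled = send_chat(prompt)
    parts.append(text)
    rounds = 1
    # Each extra round would cost another Zapier task, so cap the loop.
    while was_cancelled and rounds < max_rounds:
        # Feed the truncated answer back, as in the manual workaround.
        text, was_cancelled = send_chat("Please continue: " + text)
        parts.append(text)
        rounds += 1
    # Put the responses behind each other; adjust spacing to taste.
    return "".join(parts)


# Usage with a fake ChatGPT step that is cut off once:
fake_replies = iter([("First half of the post, ", True), ("second half.", False)])
print(generate_full_reply(lambda message: next(fake_replies), "Write a blog post"))
# First half of the post, second half.
```

The `max_rounds` cap is a design choice: since every continuation is another task, an unbounded loop could burn through a plan if a reply kept getting cut off.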

This resolved it for me, and I hope it does for you too.

KR Bram

I found the answer that works for me:

I created a Paths step in my Zap so I could add a split: one path for `was_cancelled = true` and the other for `was_cancelled = false`.

The `was_cancelled = true` path leads to a ChatGPT step where I send the message ‘continue generating’ and add the memory key of the previous ChatGPT step. Then I combine both answers and send them elsewhere.

The `was_cancelled = false` path just takes that ChatGPT answer and sends it elsewhere.
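The two paths above boil down to a single branch on `was_cancelled`. Here is a minimal sketch of that logic. `send_chat(message, memory_key)` is a hypothetical stand-in for a ChatGPT step that reuses the same memory key; it is not a real Zapier function.

```python
from typing import Callable, Tuple

# Hypothetical stand-in for a ChatGPT step:
# (message, memory_key) -> (reply_text, was_cancelled)
ChatFn = Callable[[str, str], Tuple[str, bool]]


def answer_via_paths(send_chat: ChatFn, prompt: str, memory_key: str) -> str:
    """Mirror the two Paths branches that split on was_cancelled."""
    text, was_cancelled = send_chat(prompt, memory_key)
    if was_cancelled:
        # was_cancelled = true: ask to continue with the same memory key so the
        # earlier turn is remembered, then combine both answers.
        continuation, _ = send_chat("continue generating", memory_key)
        return text + continuation
    # was_cancelled = false: the answer is complete, pass it on as-is.
    return text
```

Unlike a repeating loop, this only continues once. That matches the Paths setup, but a very long answer could still be cut off a second time.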

Hope that helps some of you.

Hi everyone!

You’ve hit on the reason for this: if it takes longer than 150 seconds for ChatGPT to provide a response, Zapier will post what there is at that point and cut off the rest. If the response is cut off, the `was_cancelled` field will be set to true.
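Zapier hasn't published how this cutoff is implemented. The following is only a hypothetical illustration of the behaviour described: keep whatever text has arrived when the time limit expires and flag the result as cancelled. The function name and the chunked input are assumptions made for the example.

```python
import time
from typing import Callable, Iterable, Tuple


def collect_with_deadline(
    chunks: Iterable[str],
    timeout_s: float = 150.0,
    clock: Callable[[], float] = time.monotonic,
) -> Tuple[str, bool]:
    """Gather reply chunks until the deadline; return (text_so_far, was_cancelled).

    The clock is only checked when a chunk arrives, so this sketch would not
    interrupt a stream that stalls completely.
    """
    deadline = clock() + timeout_s
    received = []
    for chunk in chunks:
        if clock() > deadline:
            # Too late: keep what arrived before the deadline and flag it.
            return "".join(received), True
        received.append(chunk)
    return "".join(received), False


# Simulated run: chunks arrive at t=50, 100 and 160 seconds.
fake_times = iter([0.0, 50.0, 100.0, 160.0])
print(collect_with_deadline(["Once ", "upon ", "a time"], 150.0, lambda: next(fake_times)))
# ('Once upon ', True)
```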

We do have a feature request to remove the cutoff for the conversation action for the ChatGPT integration. I’ve added everyone on this post as an interested user for the request. That lets the team know how many users want to see this and also means that you’ll get an email when we have an update.

In the meantime,


Please add me to this feature request! If you are using GPT in an end-user-facing process, this cut-off problem is very real.

Hey there,


I second this. I work primarily with the OpenAI integration instead of ChatGPT, as it has more functionality in my opinion. How do you exceed OpenAI’s token limit when you use the API as described in the documentation?

Would it be possible to share a video or screenshots of how it was done?

Hi

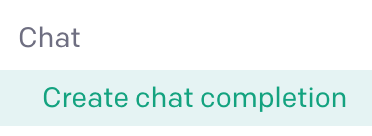

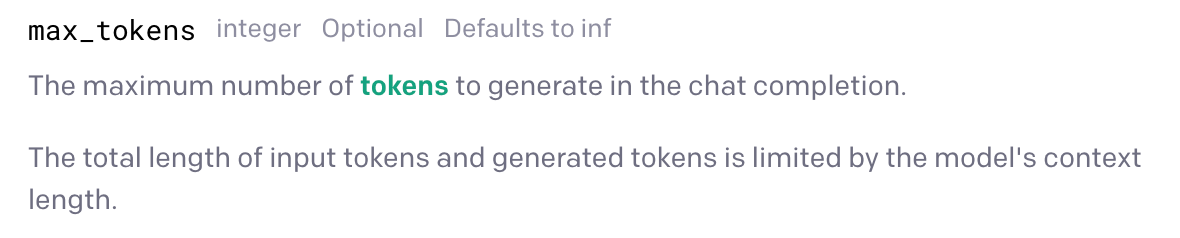

I’ve not done it myself but, as Troy mentioned, you might be able to use the OpenAI app’s API Request (Beta) action to make a call to the Create chat completion endpoint and set a value for max_tokens to give it a different token limit. In theory that would allow you to exceed the default token limit.
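For reference, the same request made outside Zapier looks roughly like the sketch below. It uses only Python's standard library. The endpoint, the `model`/`messages`/`max_tokens` body fields and the `Bearer` authorization header follow OpenAI's Create chat completion reference. The function name, model name and 1000-token value are just example choices. In the API Request (Beta) action you would presumably supply the same URL, headers and JSON body. Note that `max_tokens` still has to fit inside the model's context length together with the prompt.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, prompt: str, max_tokens: int = 1000,
                       model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build (but do not send) a Create chat completion request with max_tokens set."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # Upper bound on the answer's length; prompt + answer must fit the context.
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Sending it needs a real API key and network access:
# with urllib.request.urlopen(build_chat_request(KEY, "Write a blog post")) as resp:
#     reply = json.load(resp)["choices"][0]["message"]["content"]
```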

I don’t have a video or screenshots I can share to show exactly how you’d go about setting up that action. But we do have a more general guide on how to set up an API Request (Beta) action which you might find helpful to reference (alongside OpenAI’s API documentation): Set up an API request action

Hope that helps to get you pointed in the right direction!

Hi friends! Just following up with an update here to confirm that the feature request to remove the cutoff was closed recently!

In case you missed the email notification from us, here’s what you need to know:

“We have implemented a new version of the connection that can take up to 15 minutes to process, so there should no longer be any cut off messages in your zap runs. When testing the Zaps in the editor, you may still find that the response is cut off, because we still time out that call at 50 seconds, so that users can continue to setup their Zaps effectively.

As part of this change, you will no longer see the field `was_cancelled` set to true after a ChatGPT step runs, only during Zap testing. I hope this helps you in automating your work on Zapier.”

Happy Zapping!
