I created a Zap that uses ChatGPT to generate Facebook post content from the data I provide.

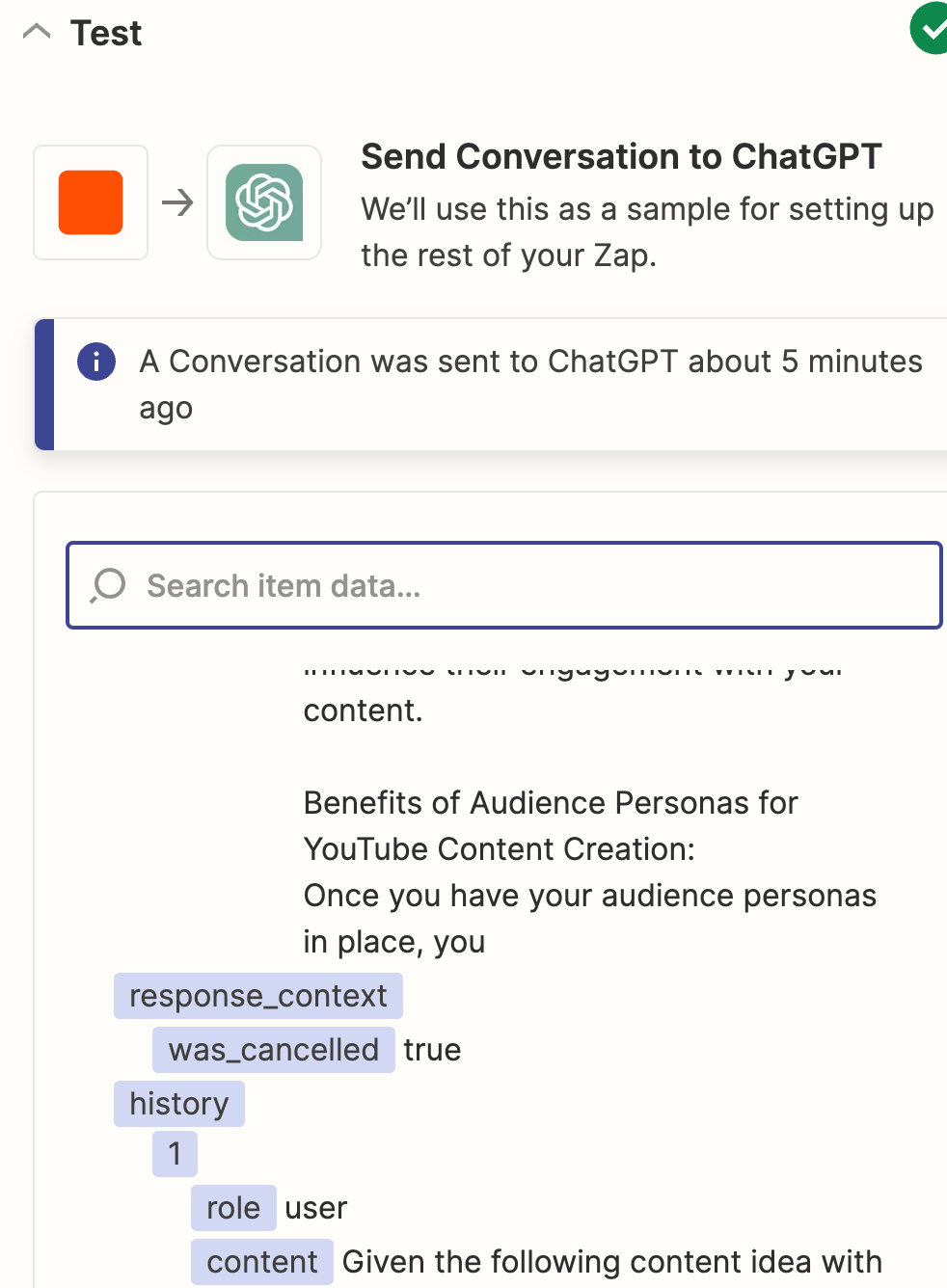

To my surprise, after several posts had been generated, I noticed that ChatGPT bases its output not only on the data I send but also on the history of previous 'conversations', which in this case are my earlier requests to generate a post.

Not only do I not need this, but it also uses a substantial number of tokens in an uncontrolled and unnecessary way.

Is it possible to stop ChatGPT from using the conversation history when generating replies?